Welcome to our coverage of Nvidia GTC 2025!

The event has already seen a packed opening keynote from Nvidia CEO Jensen Huang, who revealed a host of new hardware and AI tools - along with a few surprises too.

But if there's anything you think you might have missed, don't worry - we've got you covered!

- Nvidia is dreaming of trillion-dollar datacentres with millions of GPUs and I can't wait to live in the Omniverse

- Nvidia has updated its virtual recreation of the entire planet - and it could mean better weather forecasts for everyone

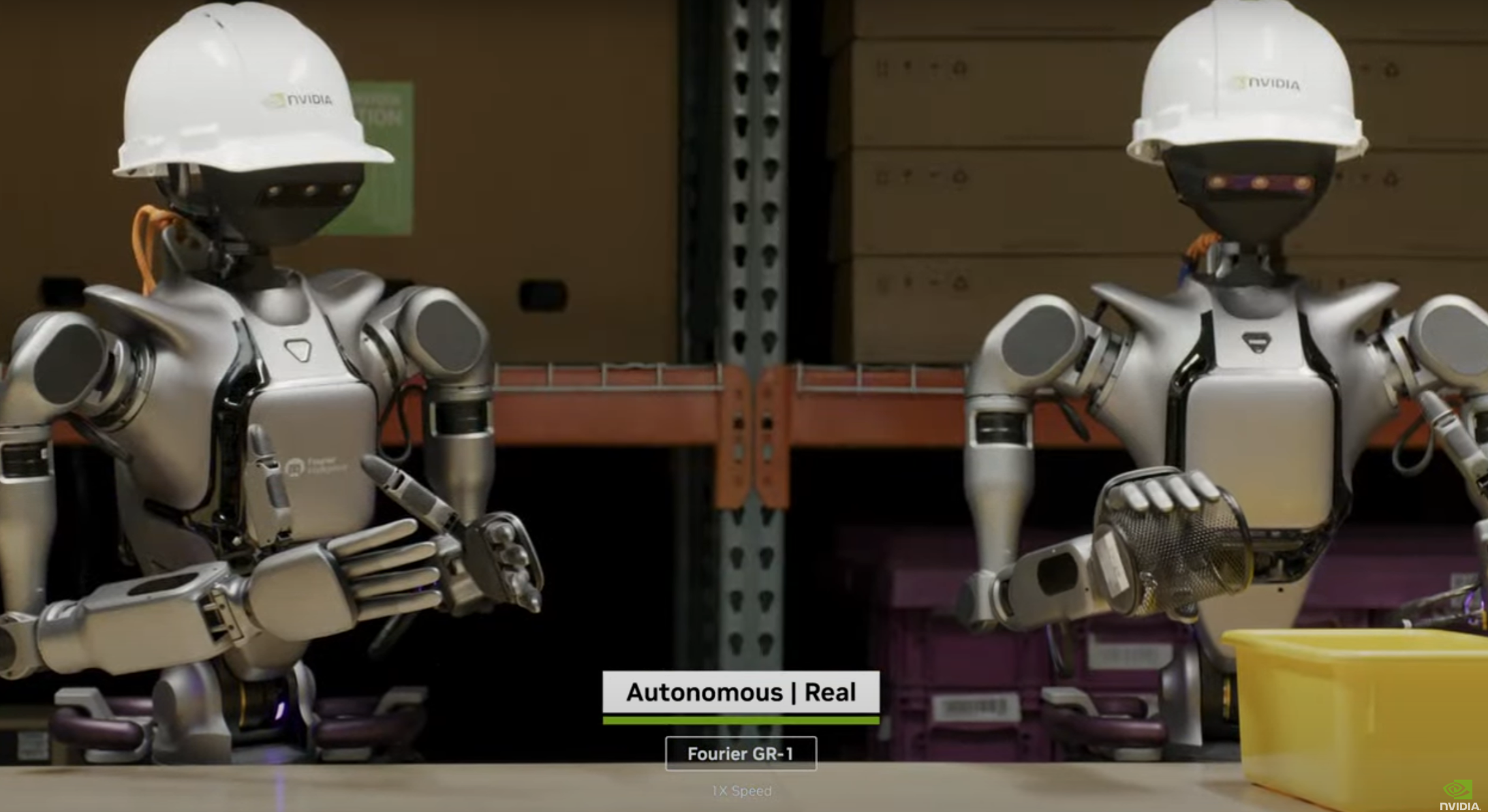

- “The age of generalist robotics is here” - Nvidia's latest GR00T AI model just took us another step closer to fully humanoid robots

- New Nvidia Blackwell Ultra GPU series is the most powerful AI hardware yet

- Nvidia’s DGX Station brings 800Gbps LAN, the most powerful chip ever launched in a desktop workstation PC

- Nvidia launches its fastest GPU ever: Nvidia RTX Pro 6000 Blackwell Workstation Edition is an enhanced version of the RTX 5090 with more of everything

Good morning and welcome to our live coverage of the Nvidia GTC 2025 keynote!

We're super excited to see what Nvidia has in store for us today, with company CEO and founder Jensen Huang set to take to the stage in a few hours' time.

We're not far off the opening keynote at Nvidia GTC 2025 now, so what can we expect?

Last year's keynote saw the reveal of Blackwell, the company's new generation of GPUs, and we're expecting another major hardware update today.

The company also unveiled a host of new data center hardware, and we're expecting more data center, server and workstation news today for sure.

But there was also a big focus on robotics, particularly in factories, and the role AI can play there, so it may well be we see more of the same today.

If you want to watch along with the keynote, you'll need to head to the Nvidia GTC 2025 website, where you can sign up.

You've not got long though - Jensen Huang will be on stage in just a few hours' time!

Less than half an hour to go! Get some snacks and energy drinks ready, this could be a long one...

Also, make sure to keep an eye out for Jensen Huang's leather jacket - the Nvidia CEO is always snappily-dressed, and jacket-watch has become a popular trend for us media types - it's important to look good when you're presenting the future of AI, you know...

Here we go! The lights go down and it's time for the keynote to begin...

"This is how intelligence is made - this is a whole new factory," an intro video outlining "endless possibilities" notes.

We're shown a number of possible use cases for the future of AI, from weather forecasting to space exploration to curing disease - all powered by tokens.

"Together, we take the next great leap," the video ends, showing a view of Nvidia's futuristic San Jose HQ.

The video ends, and we welcome Jensen Huang, CEO and co-founder of Nvidia, to the stage.

"What an amazing year...we have a lot of amazing things to talk about," he declares, ushering us into the virtual Nvidia HQ via virtual reality.

Huang admits he's doing this keynote without a script - brave!

Huang starts by commemorating 25 years of GeForce - a huge lifespan for any technology - holding up one of the newest Blackwell GPUs.

"AI has now come back to revolutionize computer graphics," he declares, showing us a stunning real-time generated AI video backdrop.

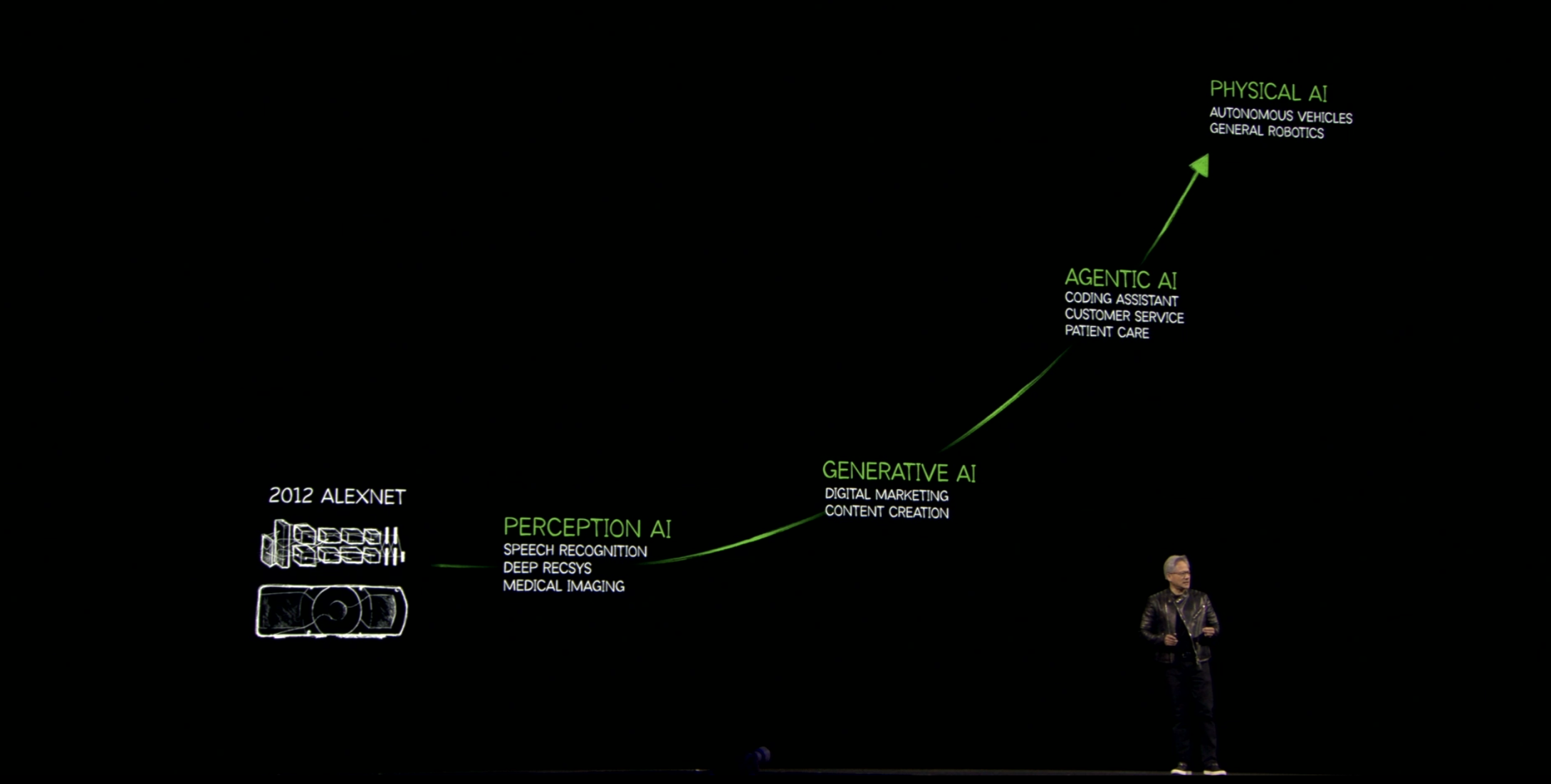

"Generative AI has changed how computing is done," Huang notes, describing a move from retrieval-based working to a generation-based one.

"Every single layer of computing has been transformed."

And now we're living in the age of agentic AI, with improved reasoning, problem-solving and action planning.

The next step is "physical AI" he notes - robotics!

But there's plenty of time for that later, Huang teases....

GTC is "the Super Bowl of AI", Huang claims - laughing that the only way he could fit more people into the event is to grow the host city of San Jose...

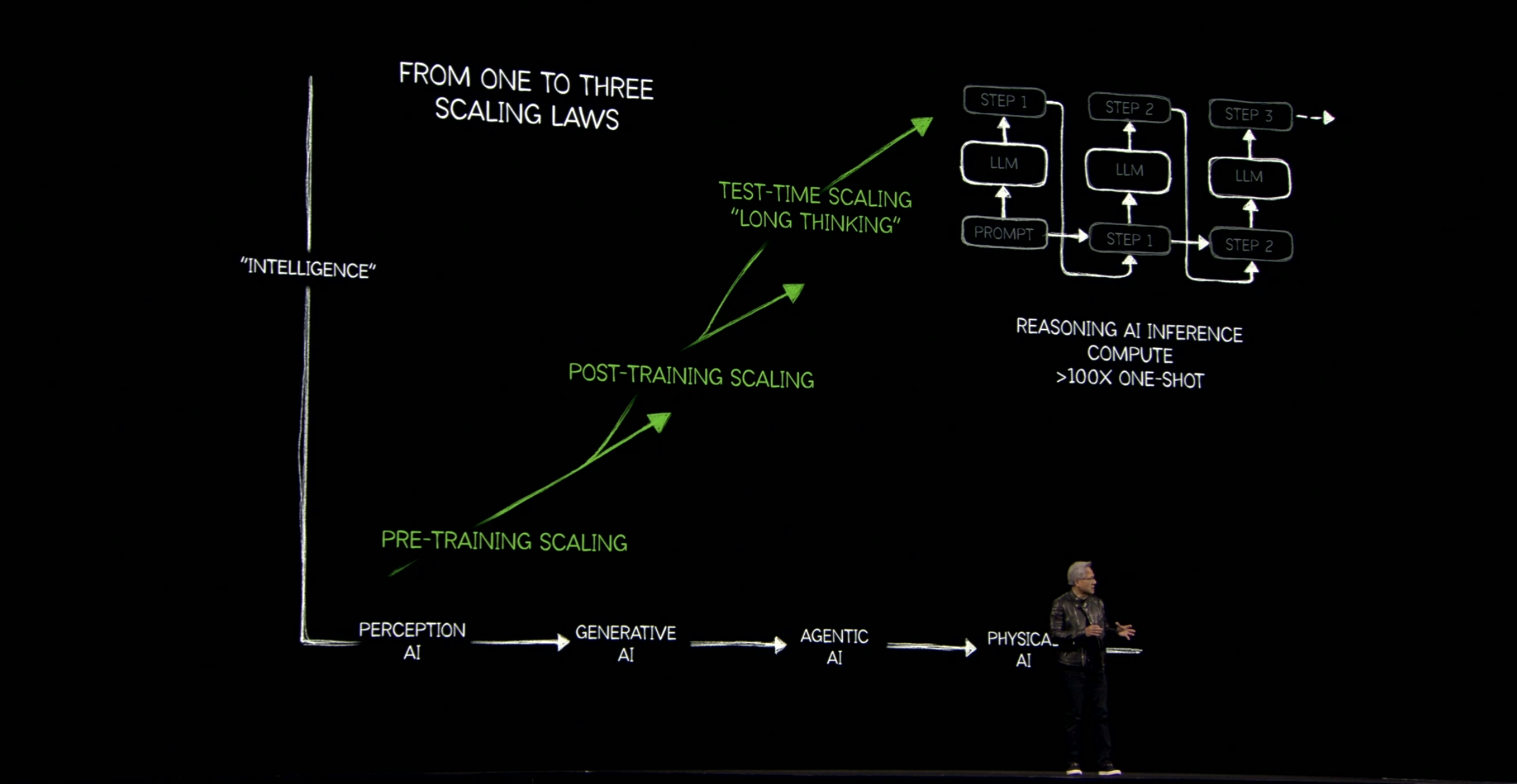

The next step of AI will be enabled by improved training and knowledge work, Huang notes, teaching models and AIs to become smarter and more efficient.

Last year, we all got it wrong, he says, as the scaling potential of AI is greater than (almost) anyone could have predicted.

"It's easily a hundred times more than we thought we needed, this time last year," he notes.

The ability of AI to reason can be the next big breakthrough, Huang notes, with the increased availability of tokens a major part of this.

Teaching the AI how to reason is a challenge though - where does the data come from? There's only so much we can find...reinforcement learning could be the key though, helping an AI solve a problem step-by-step, even when it knows the answer.

For those of you on leather jacket watch, it's a smooth, stylish number this year - anyone want to take a guess at the price?

Huang notes AI is going through an inflection point, as it becomes smarter and more widely used, and gets more resources to power it.

He expects data center build-out to reach over a trillion dollars before long, as demand for AI and a new computing approach grows.

The world is going through a platform shift, he notes, moving from human-made software to AI-made software.

"It's a transition in the way we do computing," he notes.

"The computer has become the generator of tokens, not a retriever of files," he declares - as data centers turn into what Huang calls AI factories, with one job only - generating tokens which are then turned into music, words, research and more.

Seeing it up close, the fonts and images used in the keynote look a little...off? Are they AI-generated?

If so, they look pretty perfect, without the usual mistakes you'd see in AI-generated content...have a look at the below and see what you think.

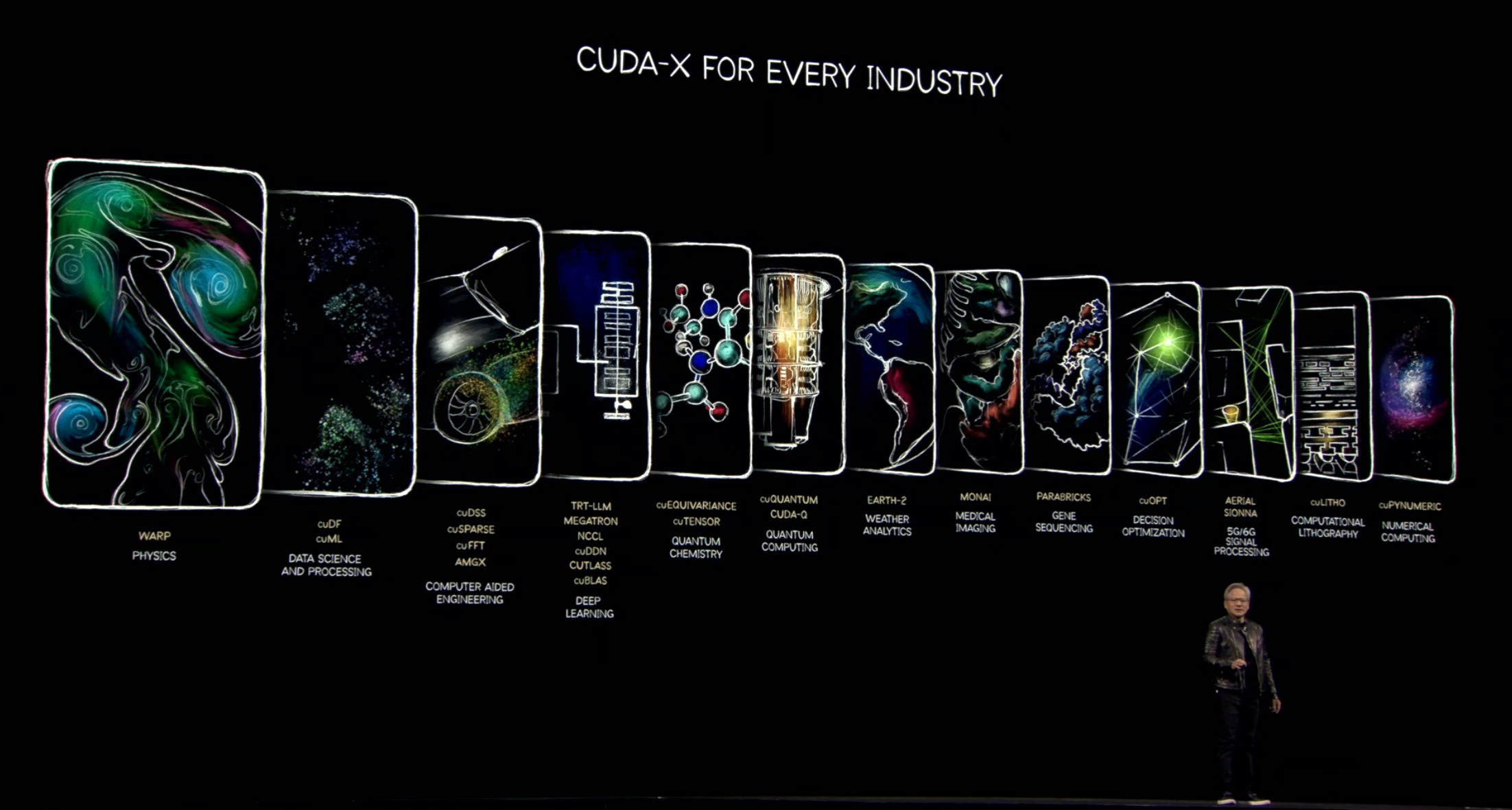

Huang runs us through the entire CUDA library in some detail (and some enthusiasm too) - it's clear that Nvidia has an AI tool for everything from quantum chemistry to gene sequencing.

"We've now reached the tipping point of computing - CUDA made it possible," Huang notes, introducing a video thanking everyone who made this progress possible - around 6 million developers across 200 countries.

"I love what we do - and I love even more what you do with it!" Huang declares.

Right - time for some AI (if we hadn't talked about it enough already).

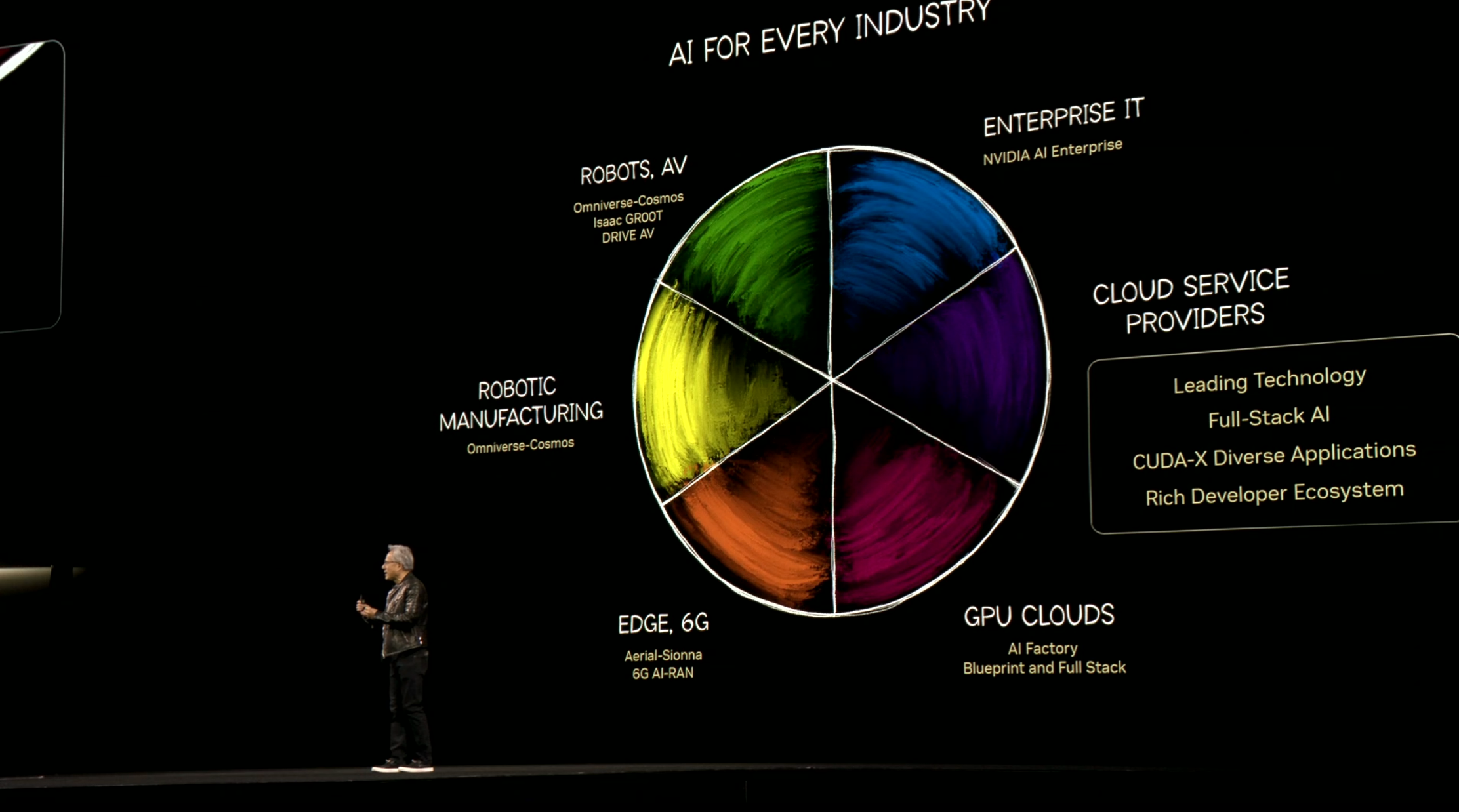

"AI will go everywhere," Huang notes, showing off a look at the various areas we'll be focusing on today.

But how do you take AI global when there are so many differences in platforms, demands and other aspects across industries, across the world?

Huang notes that context and prior knowledge can be the key to the next step forward, especially in edge computing.

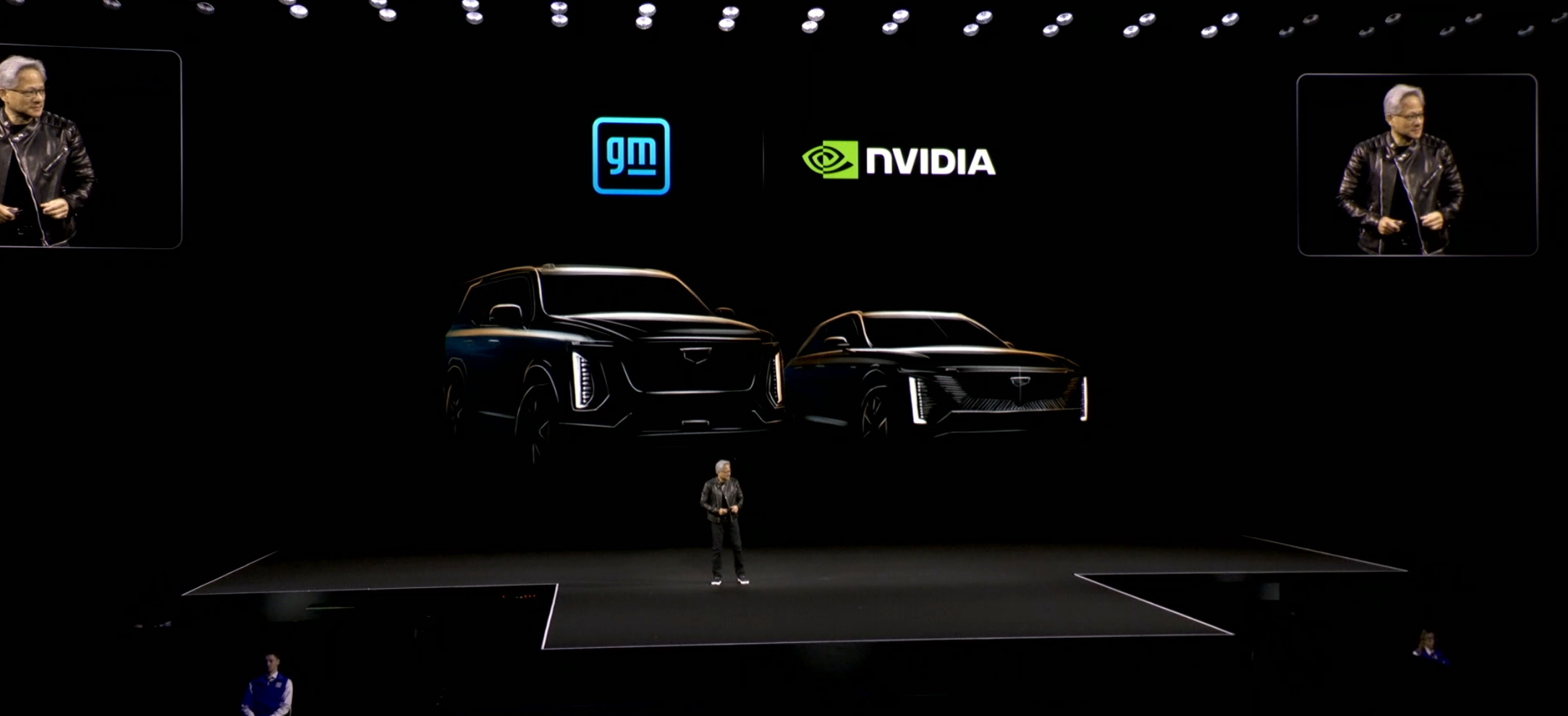

Huang turns to autonomous vehicles (AVs) - often one of the biggest areas when it comes to AI.

He notes almost every self-driving car company uses Nvidia tech, from Tesla to Waymo, from software to hardware, to try and keep pushing the industry forward.

There's another new partner today though - Huang announces Nvidia will now partner with GM going forward on all things AI.

"The time for autonomous vehicles has arrived," Huang declares.

Now, Huang turns to the problems behind training data for AI - particularly when it comes to AVs, which understandably require a whole hoard of data to make sure they drive safely.

Now on to data centers - the brains behind AI.

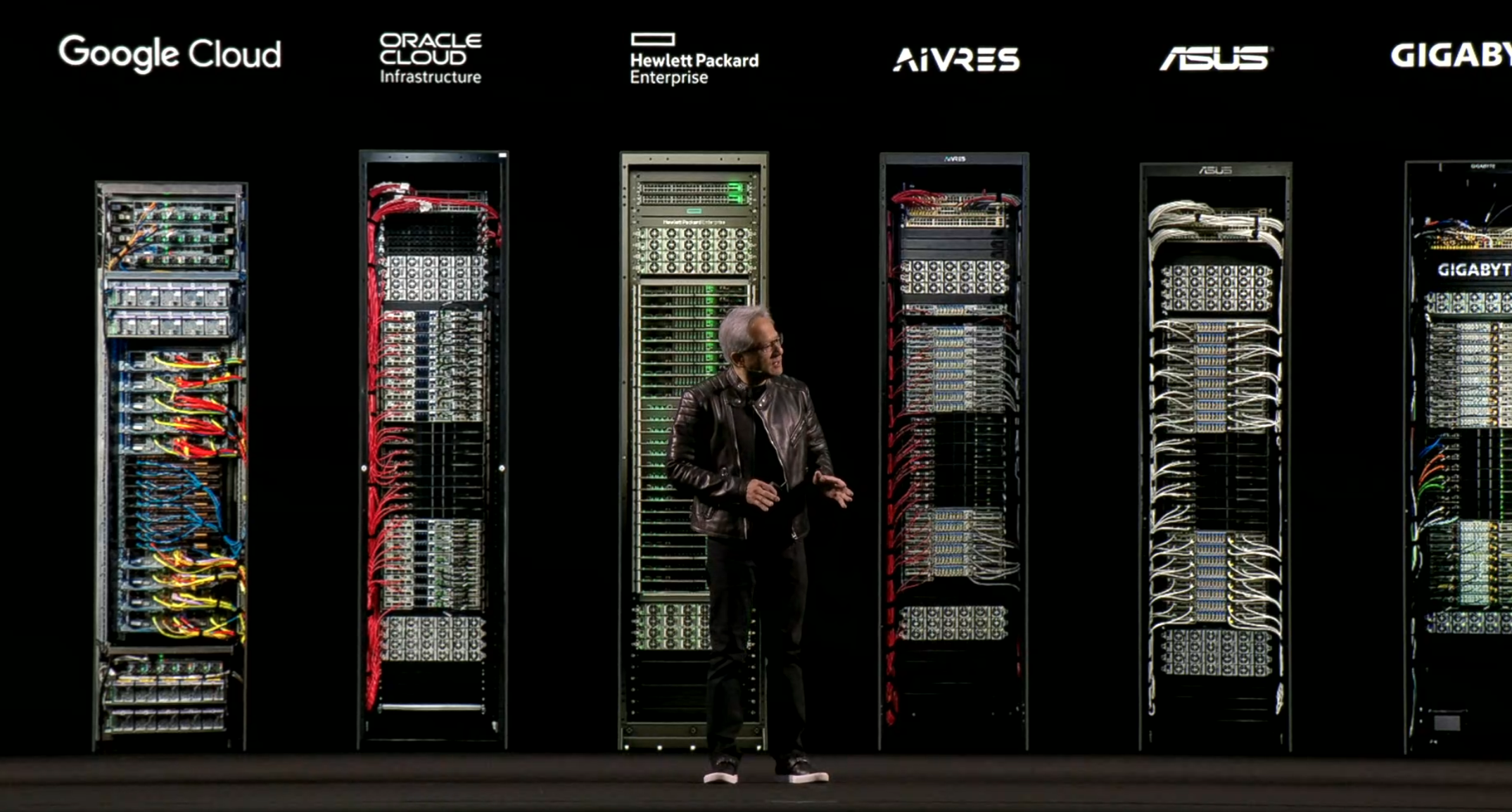

Huang notes Grace Blackwell is now in full production, with OEMs across the board building and selling products with the company's hardware.

Scaling up will be a challenge, Huang notes, but one Nvidia is definitely up for. He highlights some of the NVLink hardware utilizing Blackwell right now, including one exaflops of compute in a single rack - some seriously impressive numbers.

"Our goal is to scale up," he notes - highlighting Nvidia's goal of building a frankly super-powered system.

"This is the most extreme scale-up the world has ever seen - the amount of compute seen here," he notes.

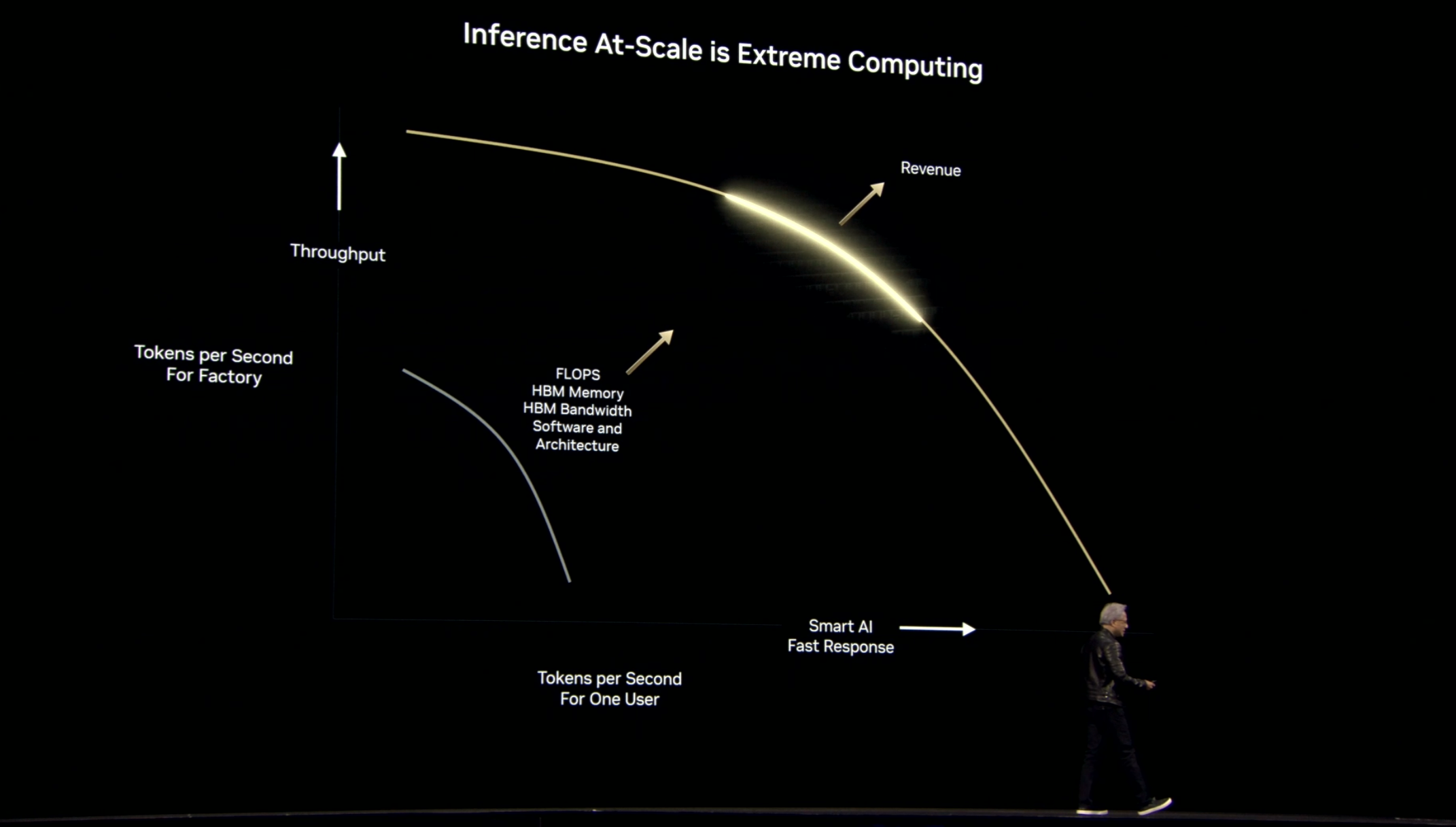

This is all to solve the problem of inference, Huang notes.

Inference at scale is extreme computing, he explains - so making sure your AI is smarter and quick to respond is vital.

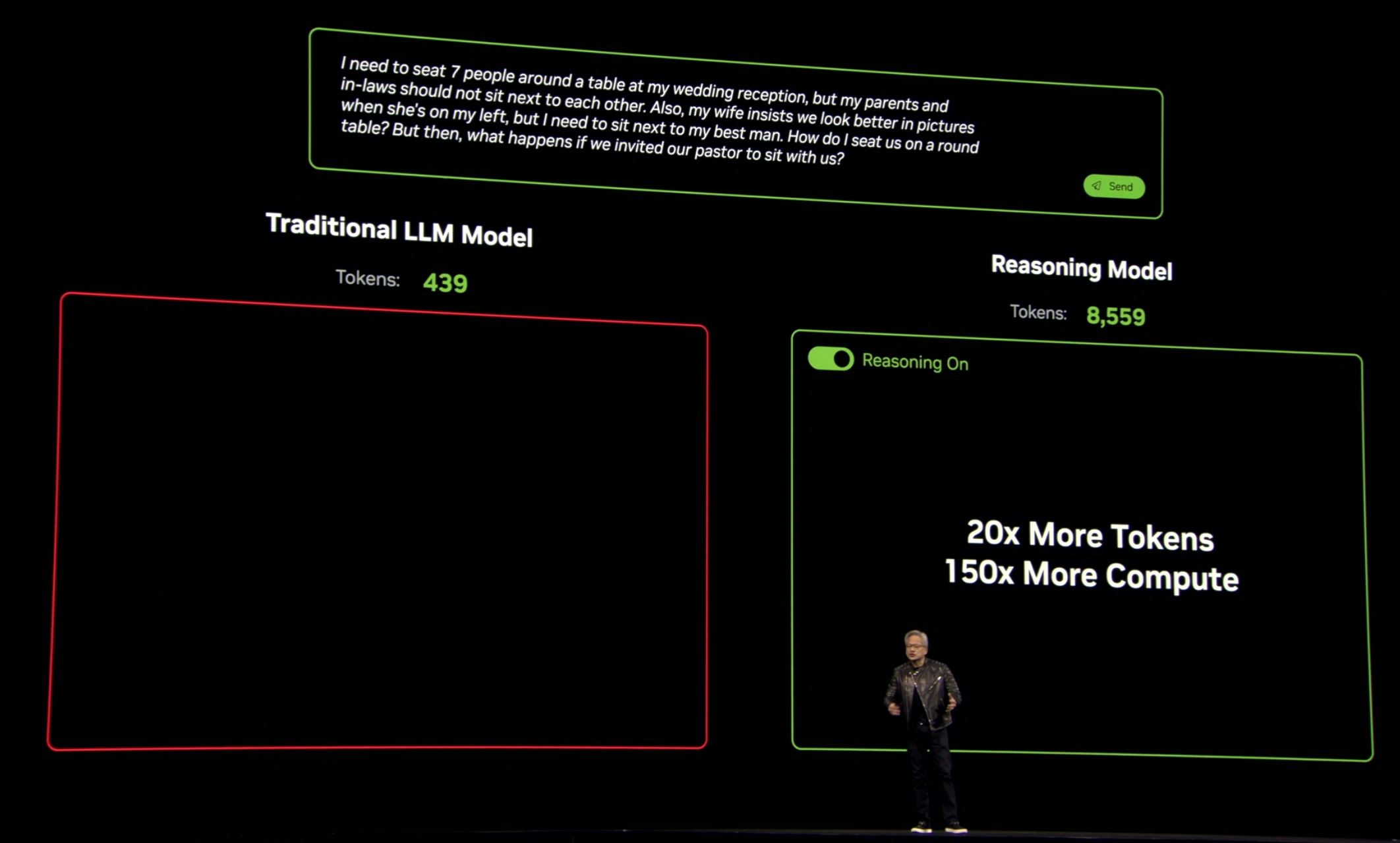

Huang shows us a demo of how a prompt asking about a wedding seating plan sees a huge difference in tokens used, and therefore compute needed, in order to get the right answer.

A reasoning model needs 20x more tokens and 150x more compute than a traditional LLM - but it does get it right, saving those event headaches, and a load of wasted tokens.
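As a rough back-of-the-envelope illustration of those multipliers, here is a minimal Python sketch. The baseline figures (400 tokens, 1 unit of compute) and the names `InferenceCost` and `reasoning_cost` are invented for the example and are not numbers or terms from the keynote; only the 20x and 150x factors come from Huang's demo.

```python
from dataclasses import dataclass


@dataclass
class InferenceCost:
    tokens: float   # tokens generated for one answer
    compute: float  # compute spent, in arbitrary units


def reasoning_cost(baseline: InferenceCost,
                   token_factor: float = 20.0,
                   compute_factor: float = 150.0) -> InferenceCost:
    """Scale a baseline cost by the multipliers quoted in the keynote."""
    return InferenceCost(baseline.tokens * token_factor,
                         baseline.compute * compute_factor)


# Hypothetical baseline: a one-shot answer of 400 tokens costing 1 unit.
one_shot = InferenceCost(tokens=400, compute=1.0)
reasoning = reasoning_cost(one_shot)

# Compute per generated token also rises, by 150 / 20 = 7.5x in this sketch.
per_token_ratio = (reasoning.compute / reasoning.tokens) / (
    one_shot.compute / one_shot.tokens
)
print(reasoning.tokens, reasoning.compute, per_token_ratio)
```

The point of the arithmetic is that the compute bill grows faster than the token count, which is why inference hardware was such a big theme of the keynote.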

With next-gen models possibly set to feature trillions of parameters, the need for powerful systems such as Nvidia Blackwell NVL72 could make all the difference, Huang notes.

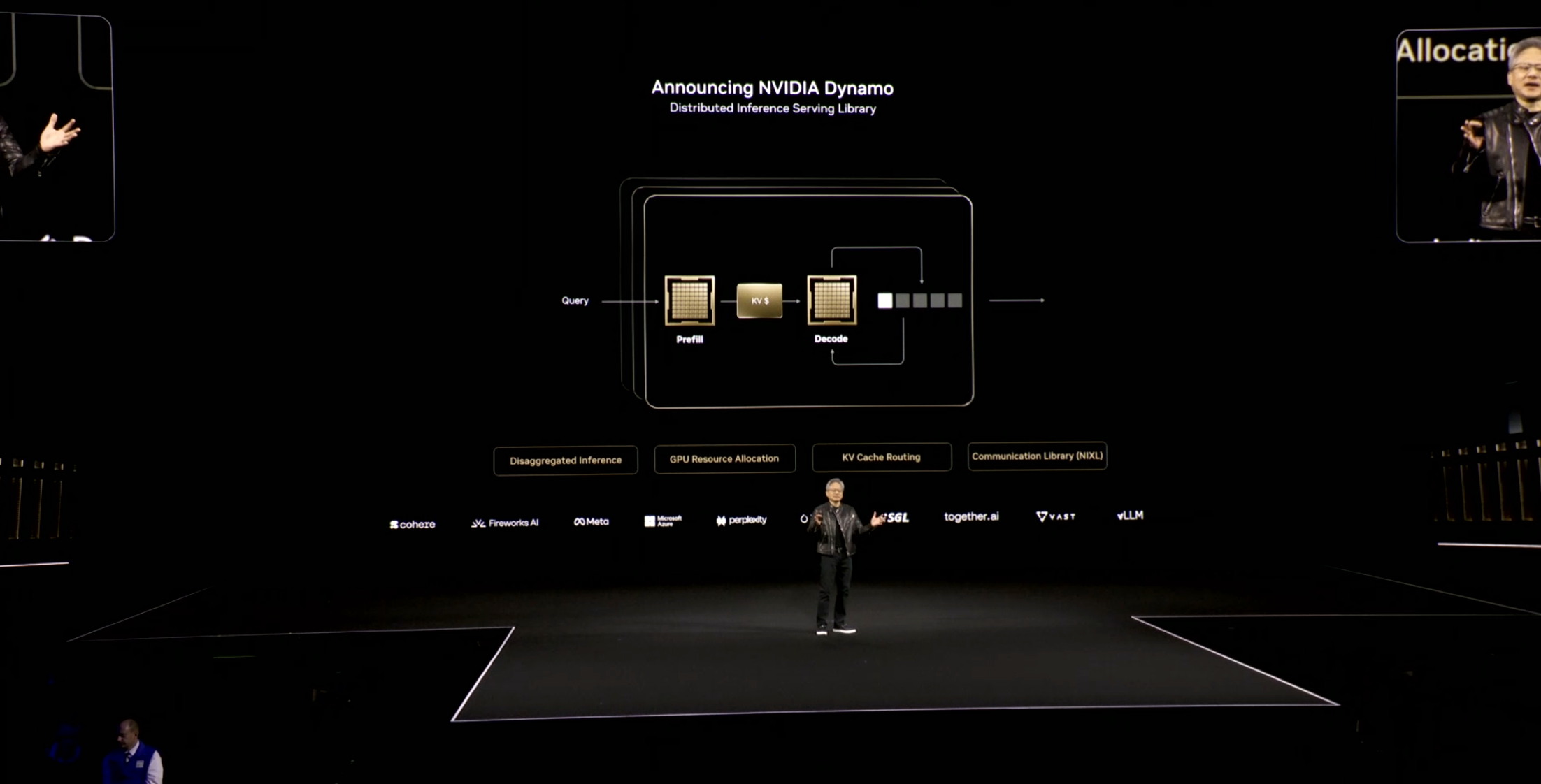

To help solve all of this, there's Nvidia Dynamo.

"This is essentially the operating system of an AI factory," Huang notes.

The software of the future will be agent-based, he says, highlighting how "insanely complicated" Dynamo is.

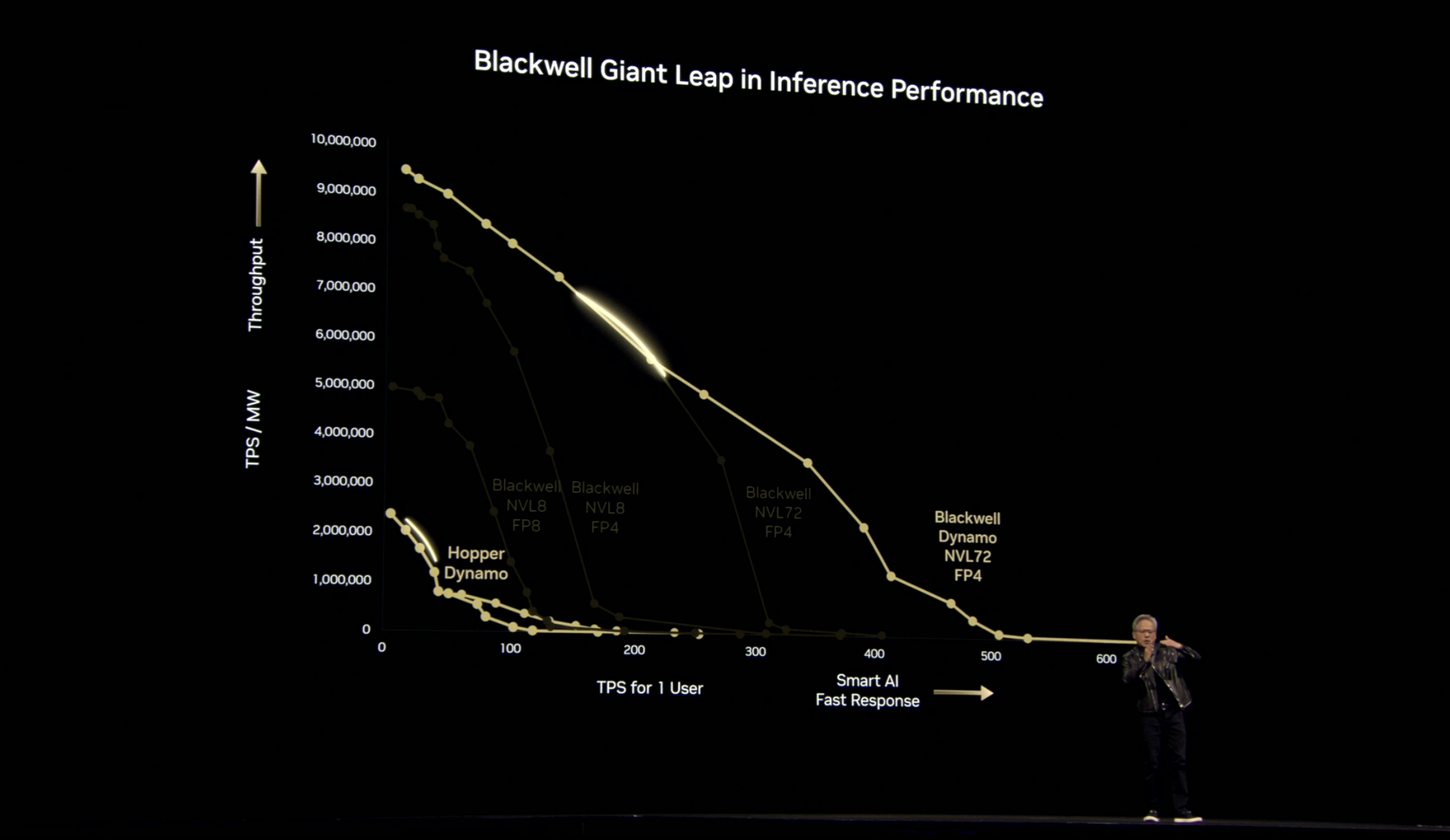

Blackwell understandably offers a "giant leap in inference performance" over Hopper, Huang outlines.

"We are a power-limited industry," he notes, so Dynamo can push this even further by offering more tokens per second for a single user, meaning faster responses all round.

Despite the big leap forward, Huang is keen to still push the benefits of Hopper - noting, "there are circumstances where Hopper is fine," to big laughs from the crowd.

"When the technology moves this fast...we really want you to invest in the right versions," he says.

Blackwell offers 40x the inference performance of Hopper, he declares - "It's not less - it's better - the more you buy, the more you save!"

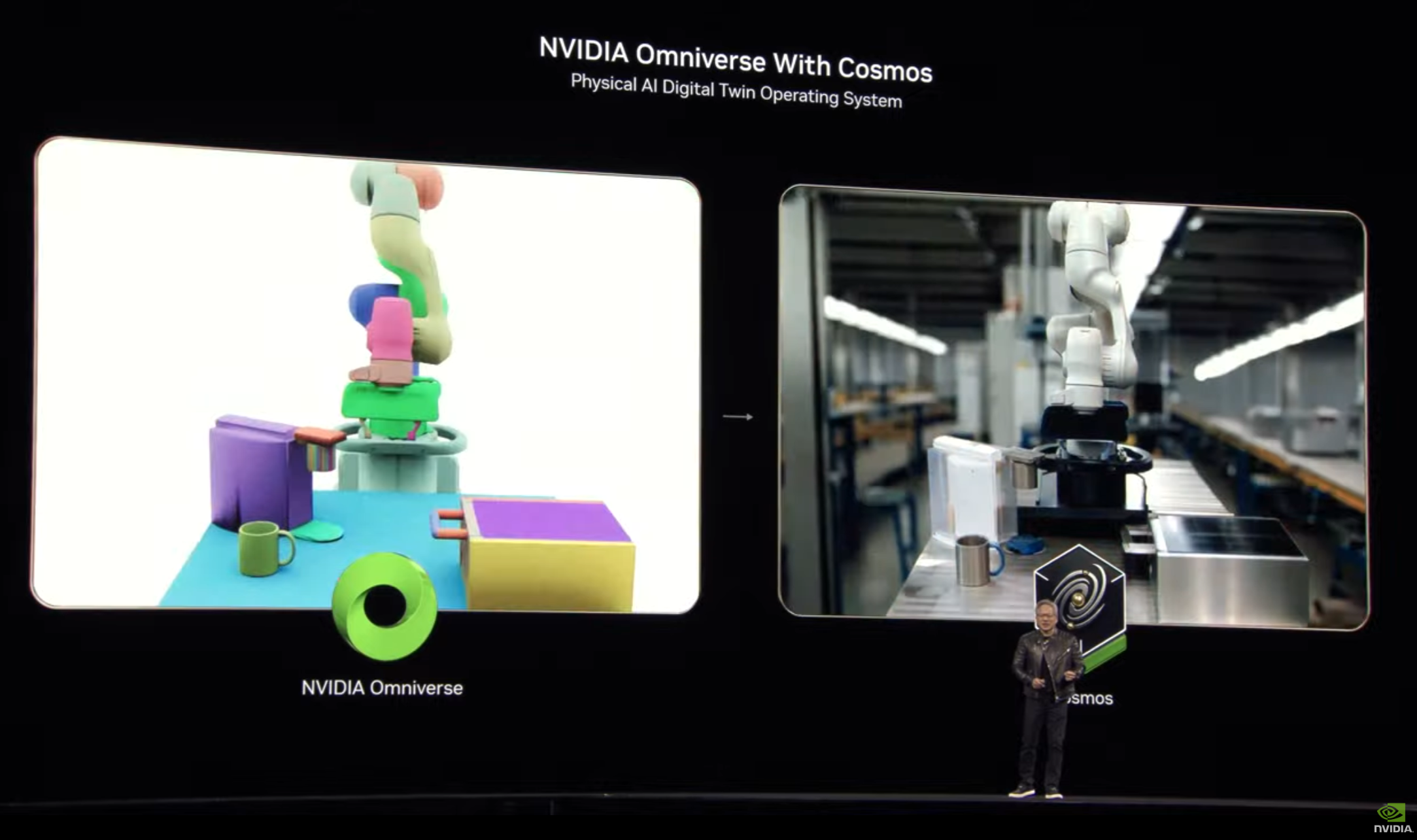

Huang moves on to looking at an actual AI factory - specifically, though, digital twins.

AI factories can be designed and anticipated using Nvidia's Omniverse technology, allowing for optimized layout and efficiency in the quickest (and most collaborative) way.

Huang is back, and says he has a lot to get through - so get ready!

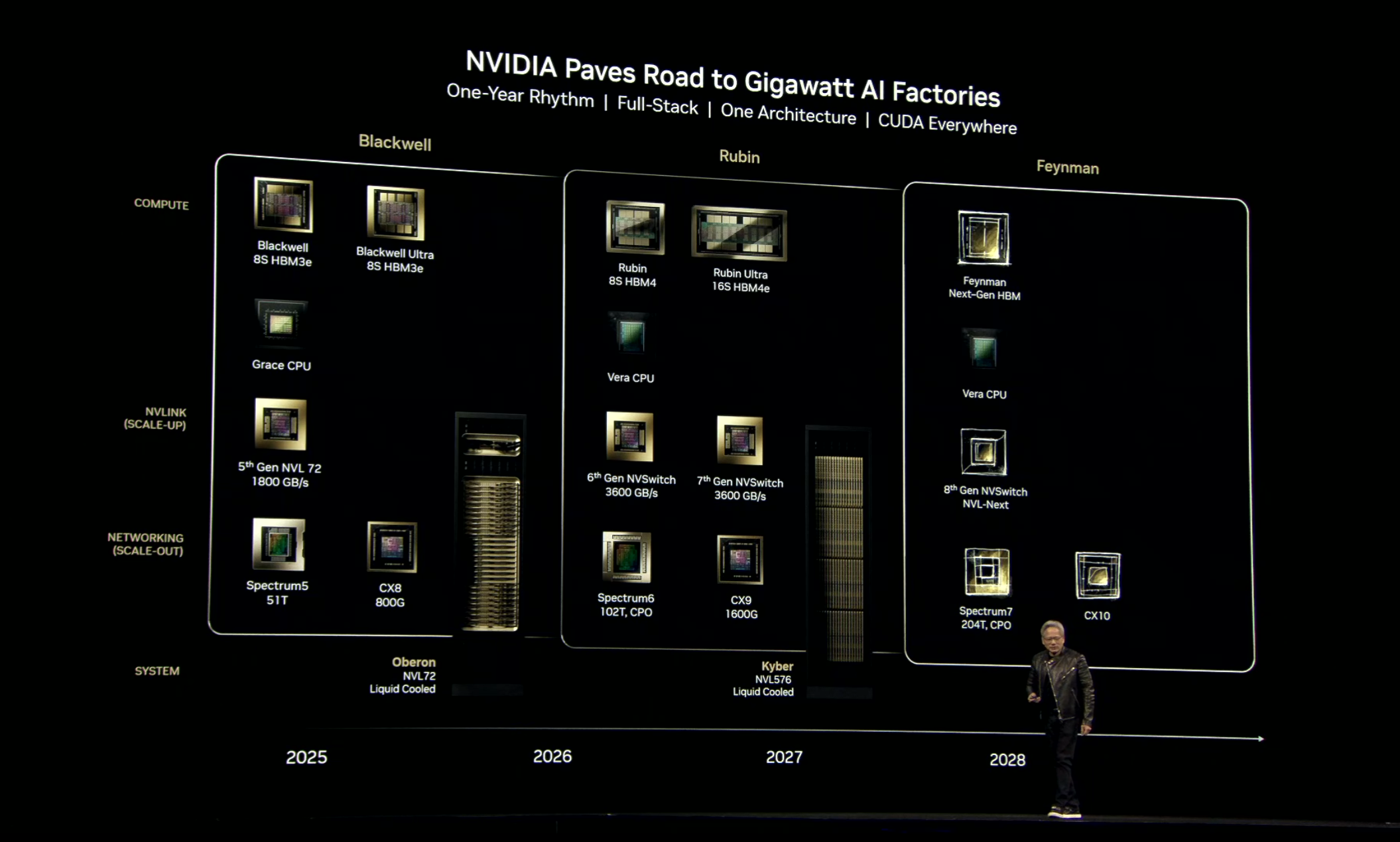

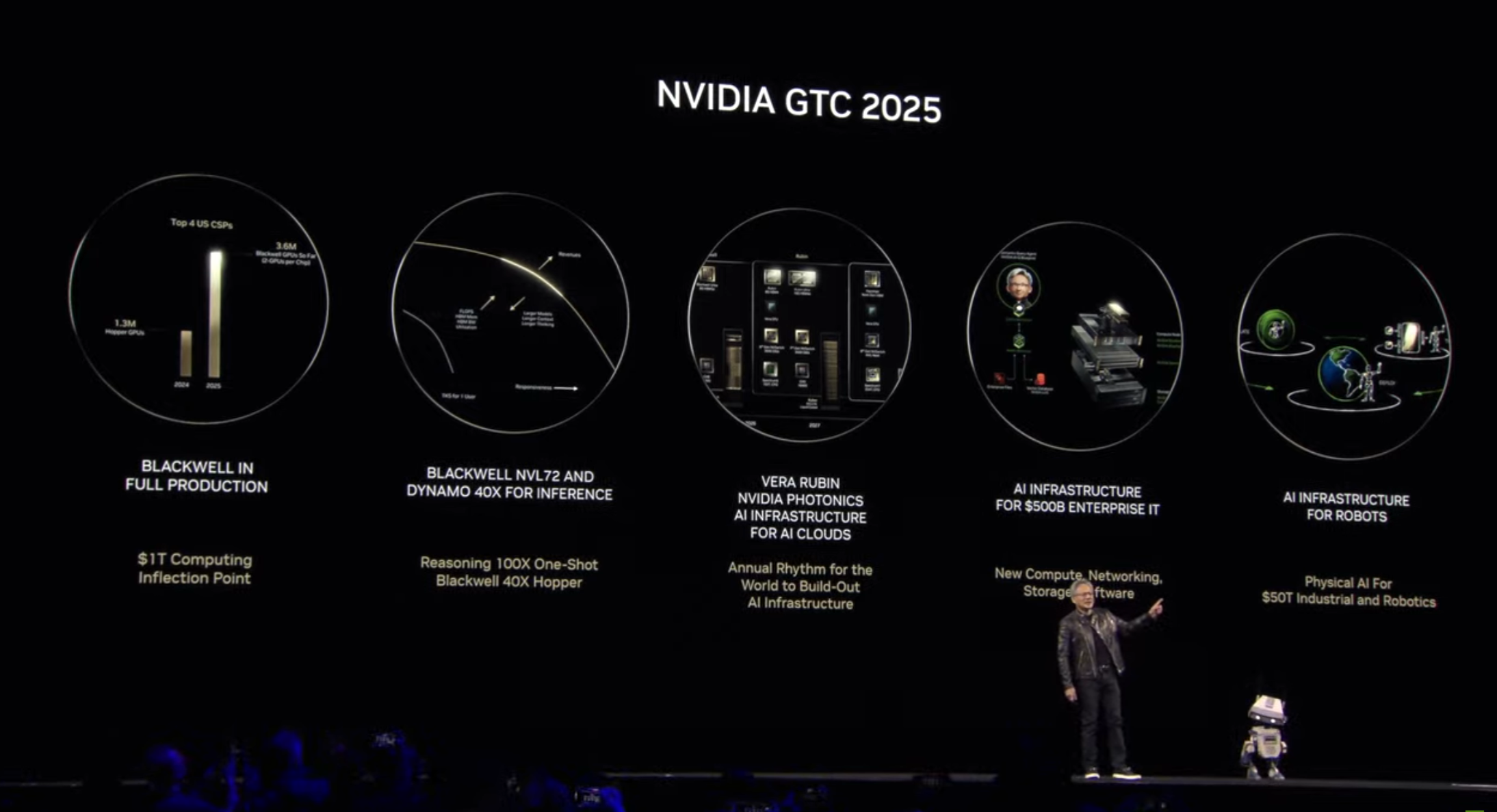

First up, roadmap information - the Blackwell Ultra NVL72 is coming in the second half of 2025, offering some huge leaps forward.

Next up, the Vera Rubin NVL144 - named after the astronomer whose work provided key evidence for dark matter - which will be coming in the second half of 2026.

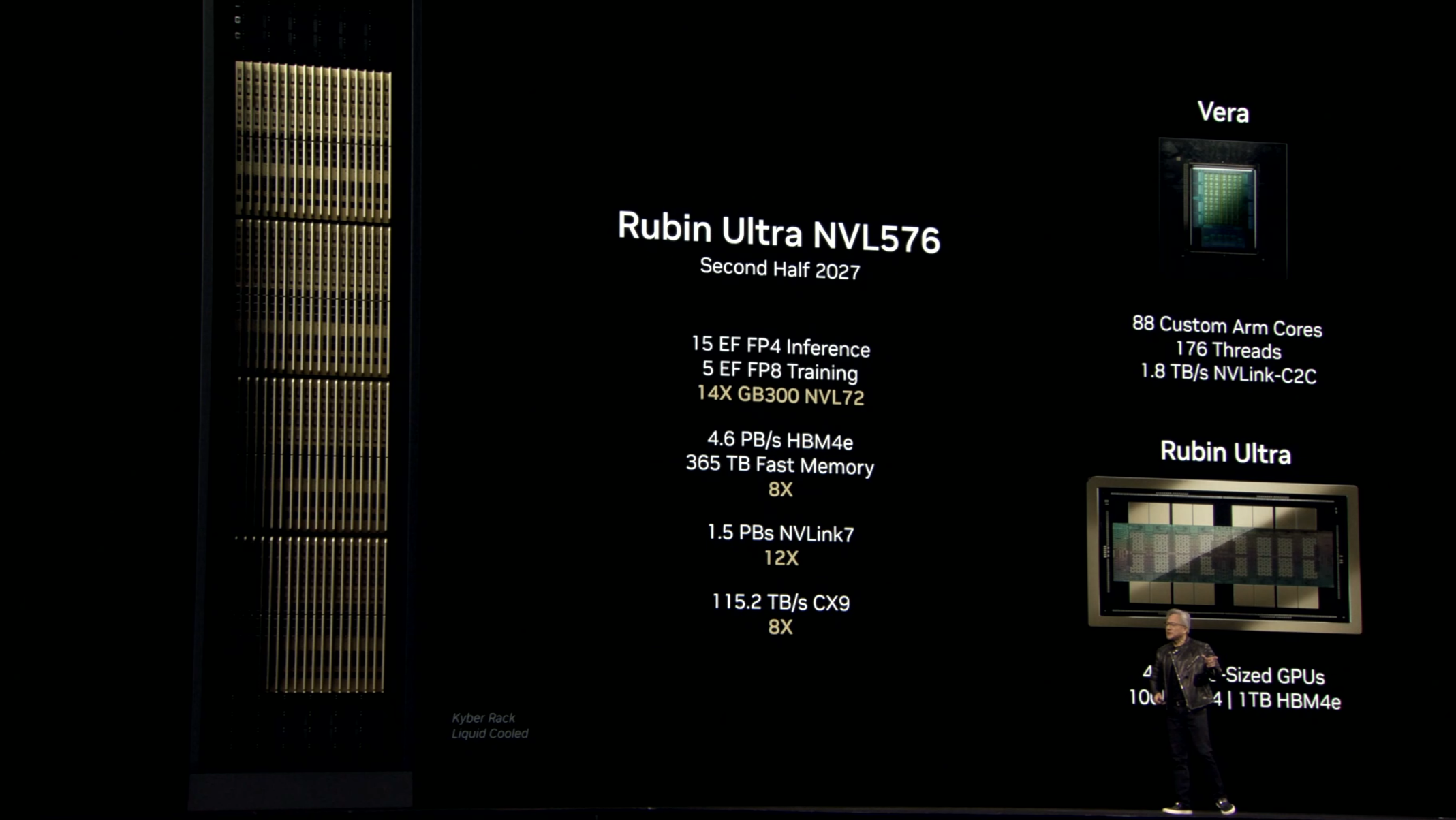

And after that - Rubin Ultra NVL576, coming in the second half of 2027, with some frankly ridiculous hardware, what Huang says is "an extreme scale-up" featuring 2.5 million parts, and connected to 576 GPUs.

Talk about planning for the future - even Huang admits some of this is slightly ridiculous - "but it gives you an idea of the pace at which we are moving!"

Where do you go after that?

Huang moves on to other parts of the Nvidia product line-up - first up, Ethernet.

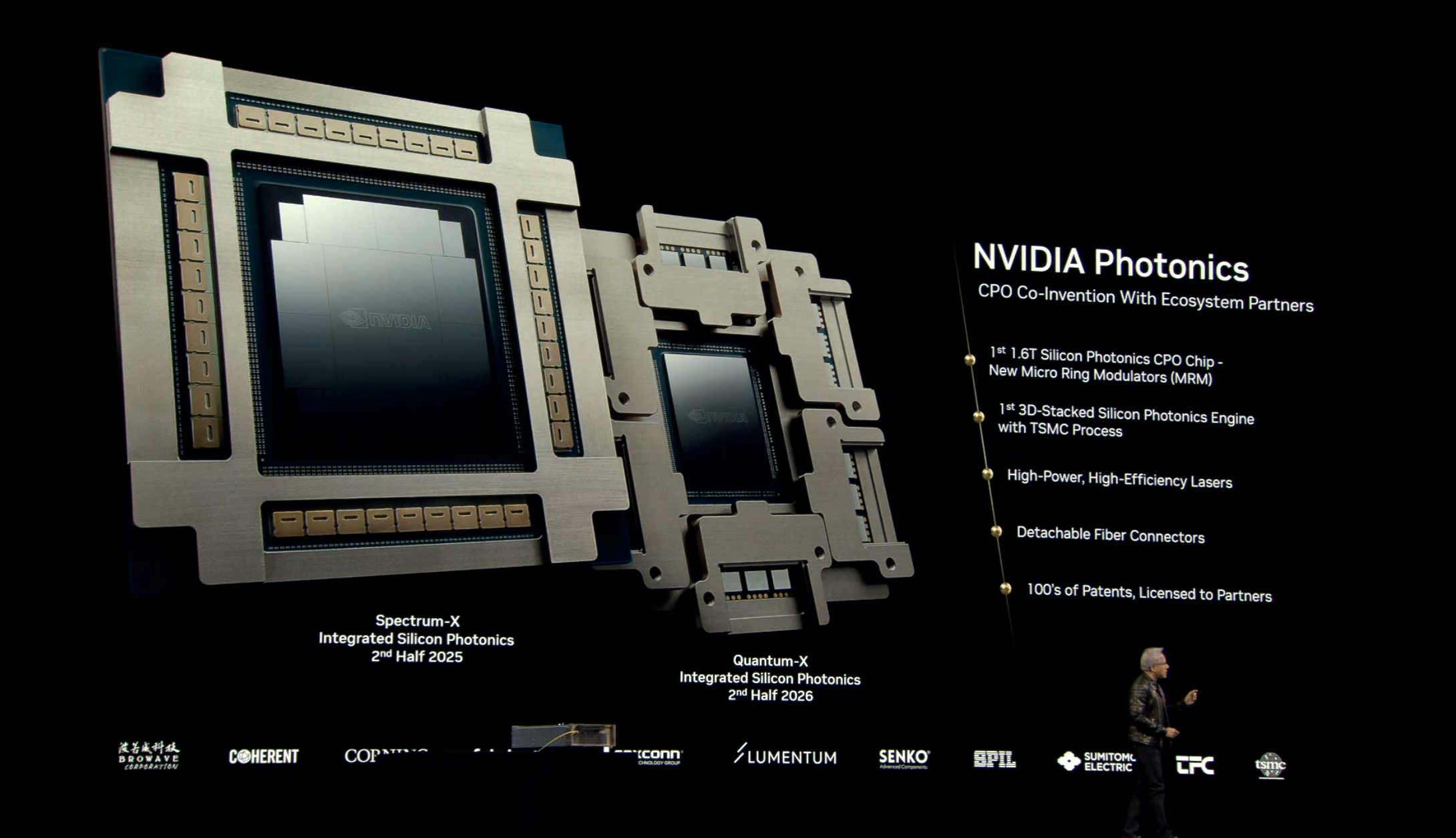

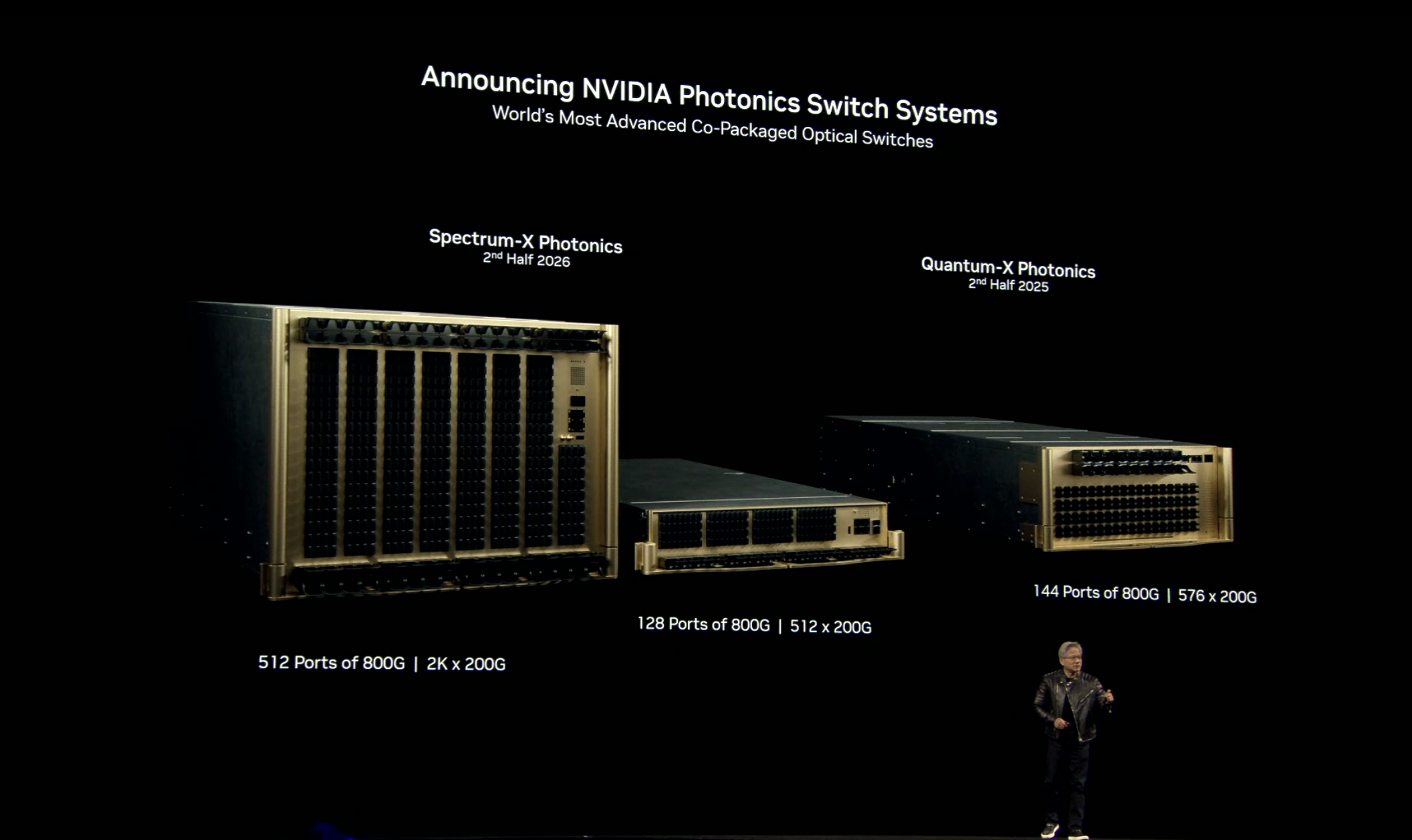

Improving the network itself will help make the whole process smoother, he notes, announcing the new Spectrum-X Silicon Photonics Ethernet switches, which offer 1.6 terabits per second per port and deliver 3.5x energy savings and 10x resilience in AI factories.

We're then treated to the amazing sight of one of the world's richest men struggling to untangle a cable as he looks to show us exactly how the new systems will work.

Say what you want about Jensen, he knows how to stay human!

Here's a look at the entire Nvidia roadmap for the next few years - after Rubin, we're getting a new generation named after Richard Feynman!

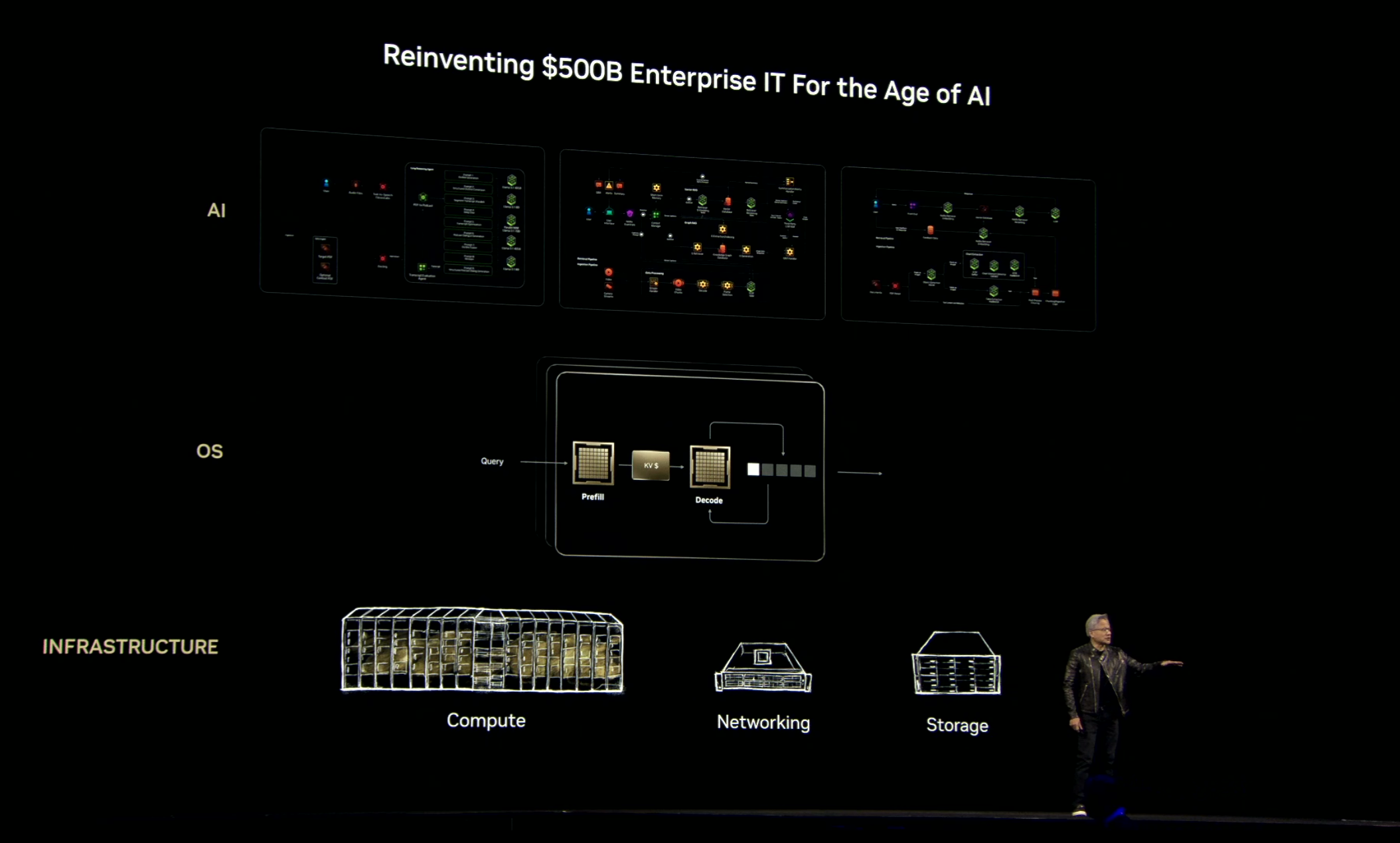

Now, we're moving on to enterprise AI - which is set for a huge shake-up as workflows get smarter and more demanding.

AI agents will be everywhere, but how enterprises run them will be very different, he notes.

In order to cope with this, we're going to need a whole host of new computing devices...

Well...we'd like to bring you the big finish here, but unfortunately the stream of the keynote has gone offline abruptly!

Not sure what happened there - but we're back! Phew...

Jensen is talking about the new DGX Station computing offering, describing it as "the computer of the age of AI" - this is what AI will run on.

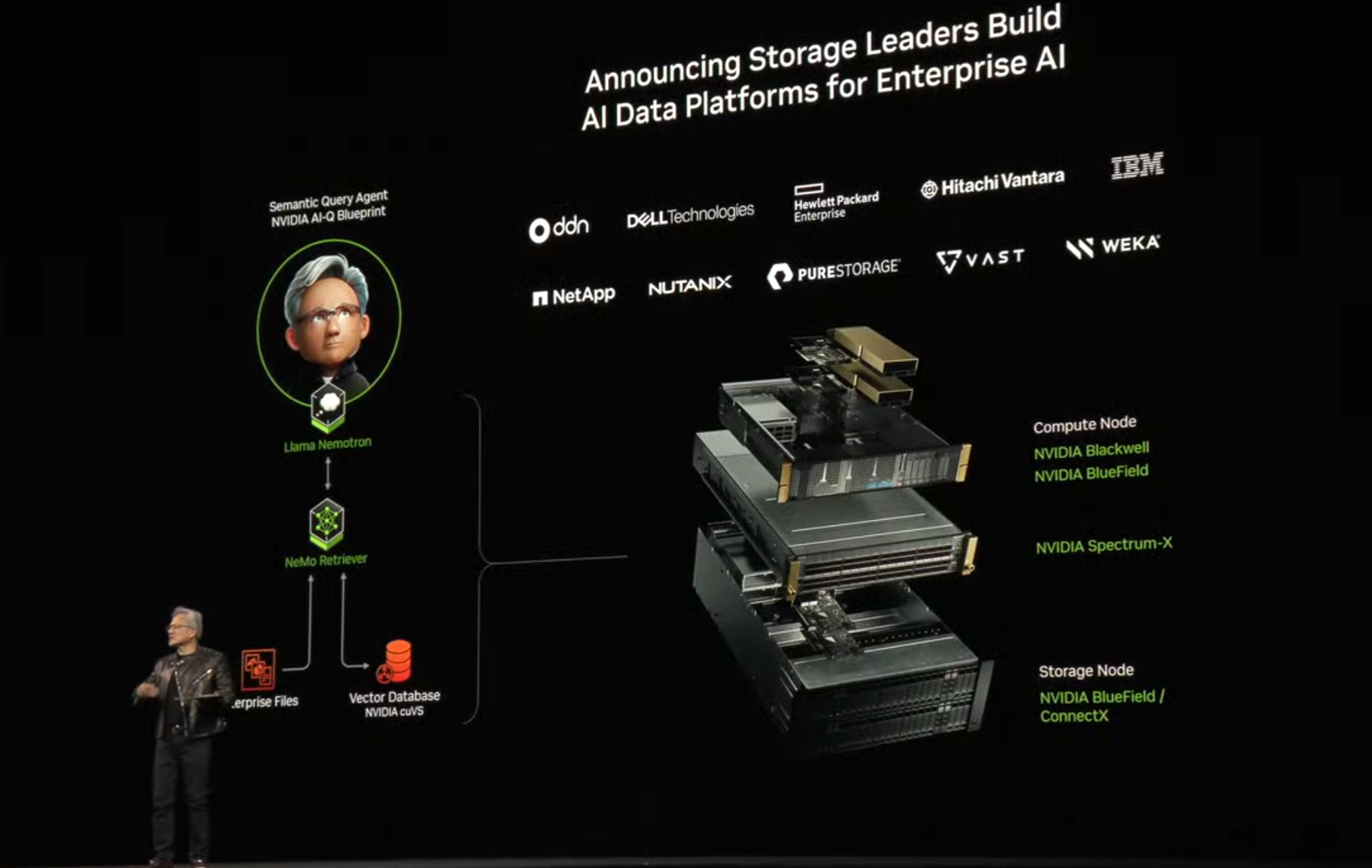

Nvidia is also working on boosting storage for the AI industry, working with a host of partners on a number of new releases, including a whole product line from Dell.

"For the very first time, your storage stack will be GPU-accelerated," he notes.

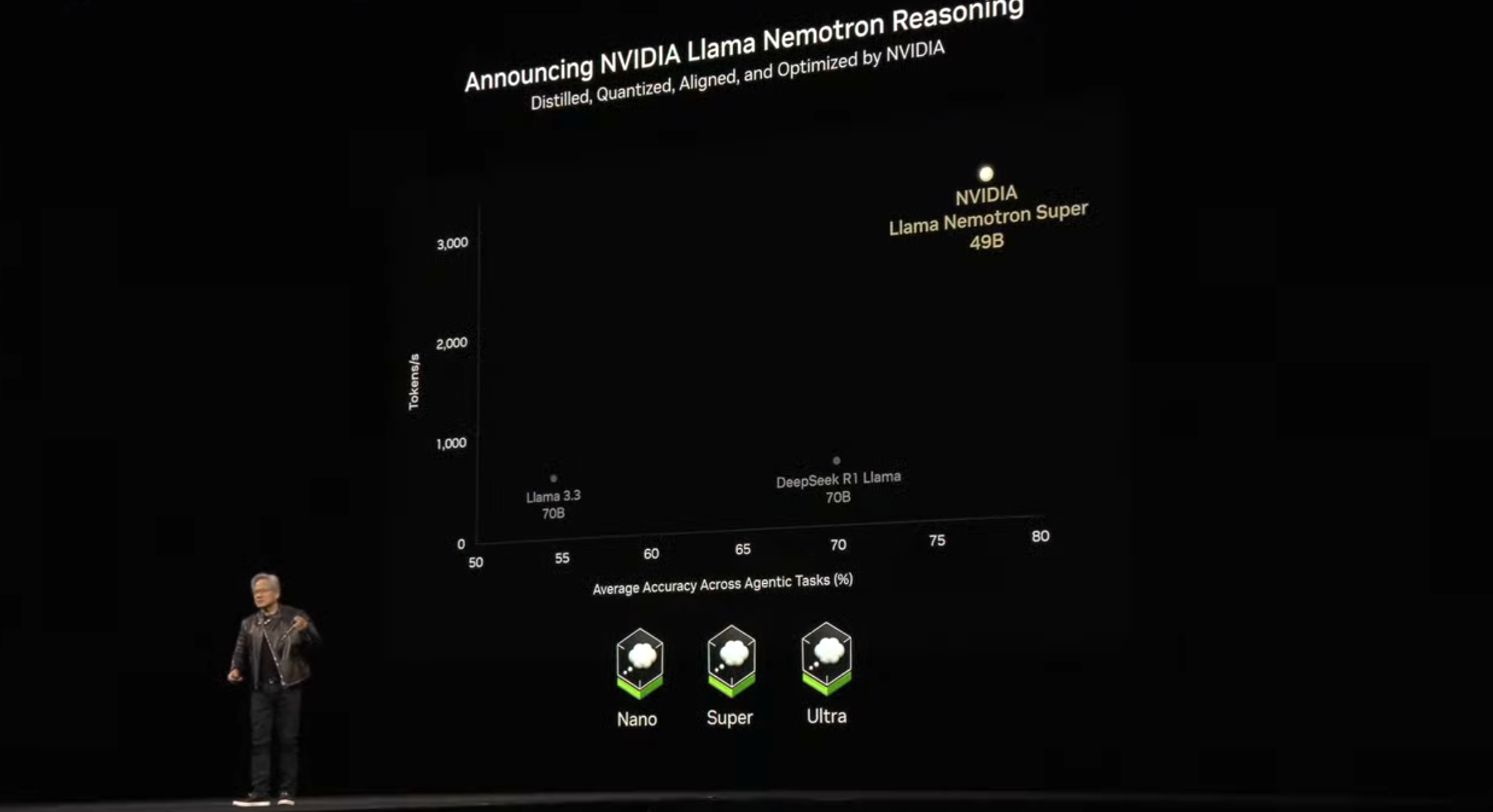

We're really rattling along now - there's also Nvidia Llama Nemotron Reasoning, an AI model "that anyone can run", Huang notes.

Part of NIMs, it can run on any platform, Huang claims, naming a host of partners building their AI offerings using Nvidia's services.

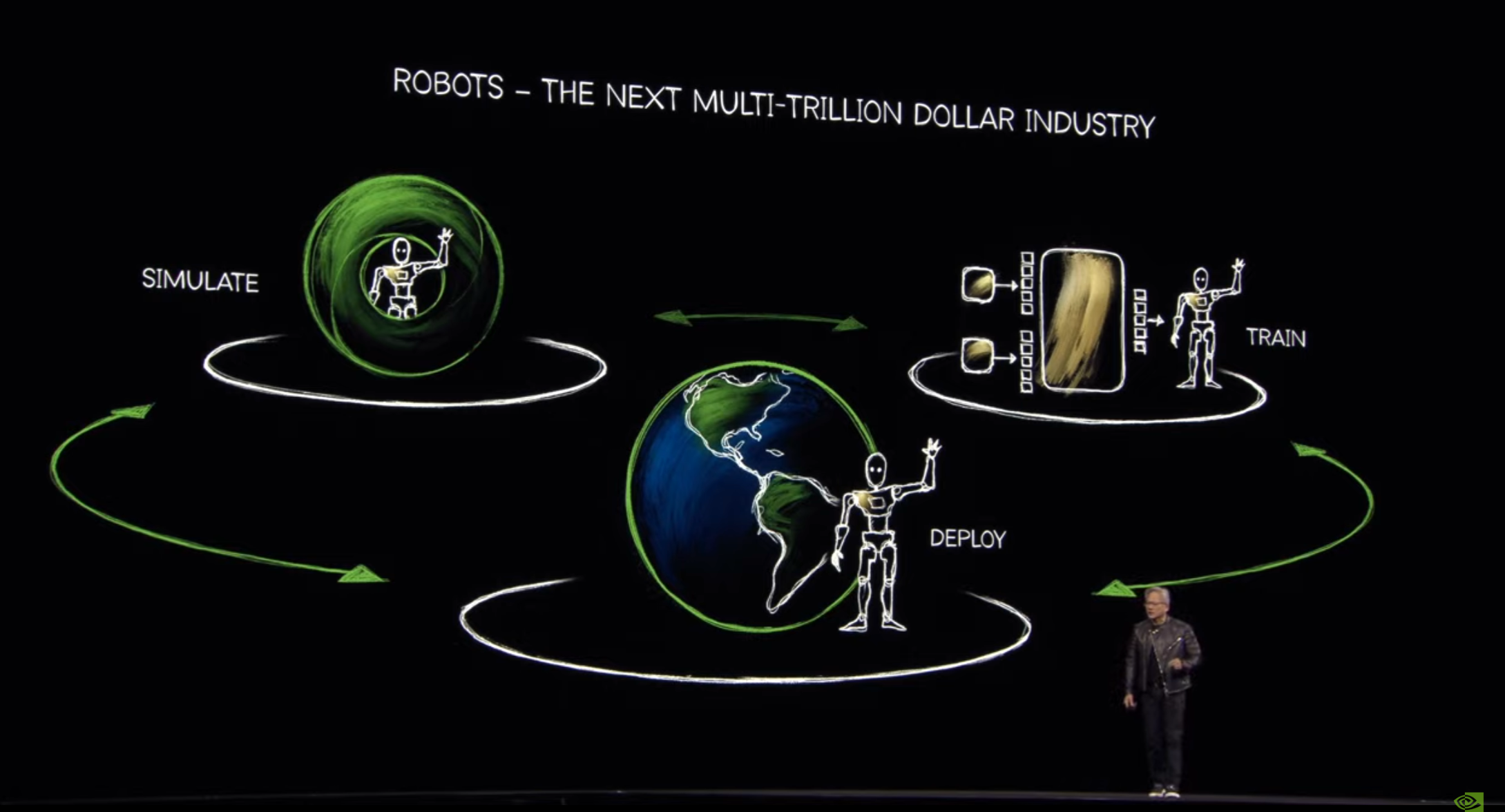

Now we're on to robotics - the big finish!

"The time has come for robots," Huang declares. "We know very clearly that the world has a severe shortage of human laborers - 50 million short."

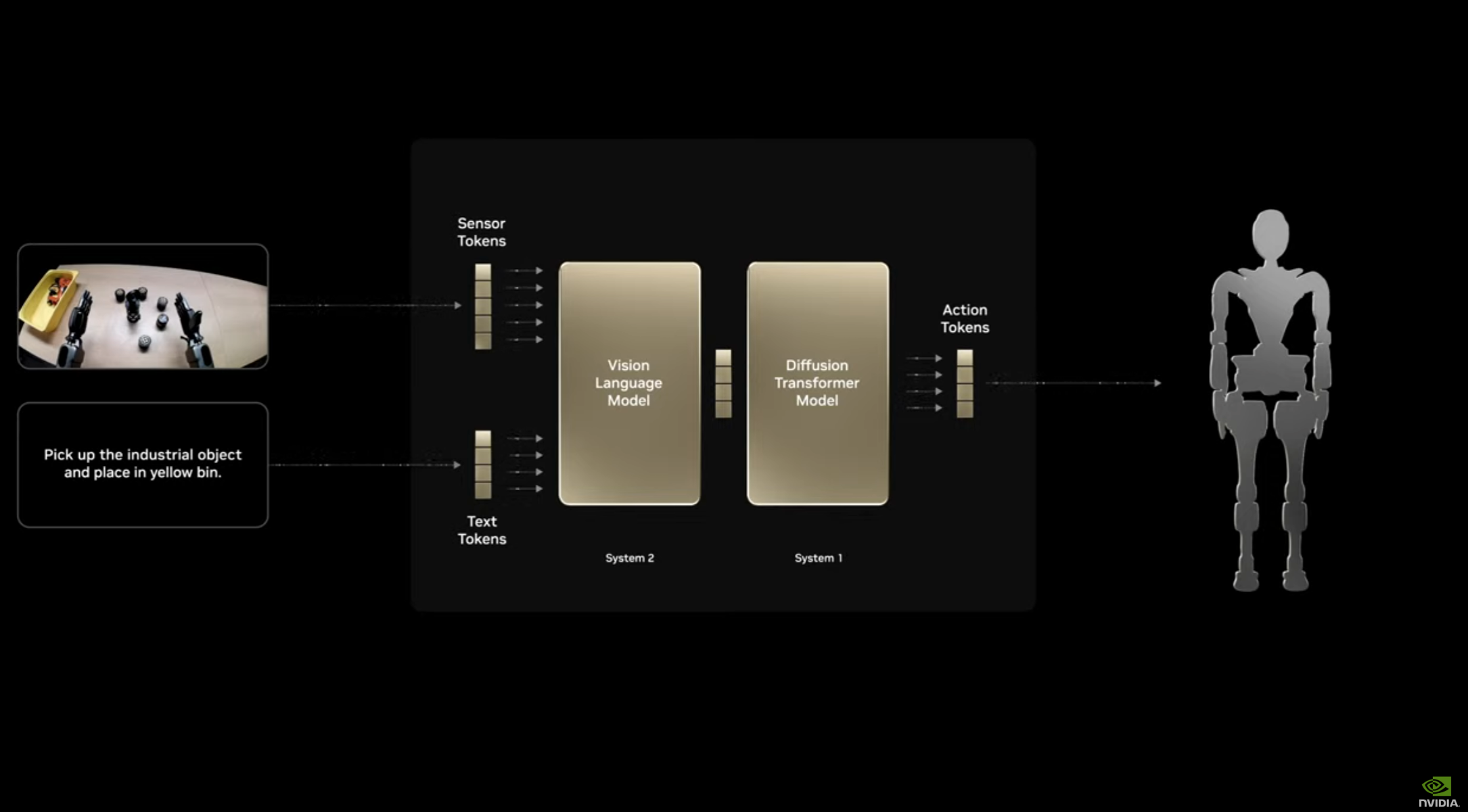

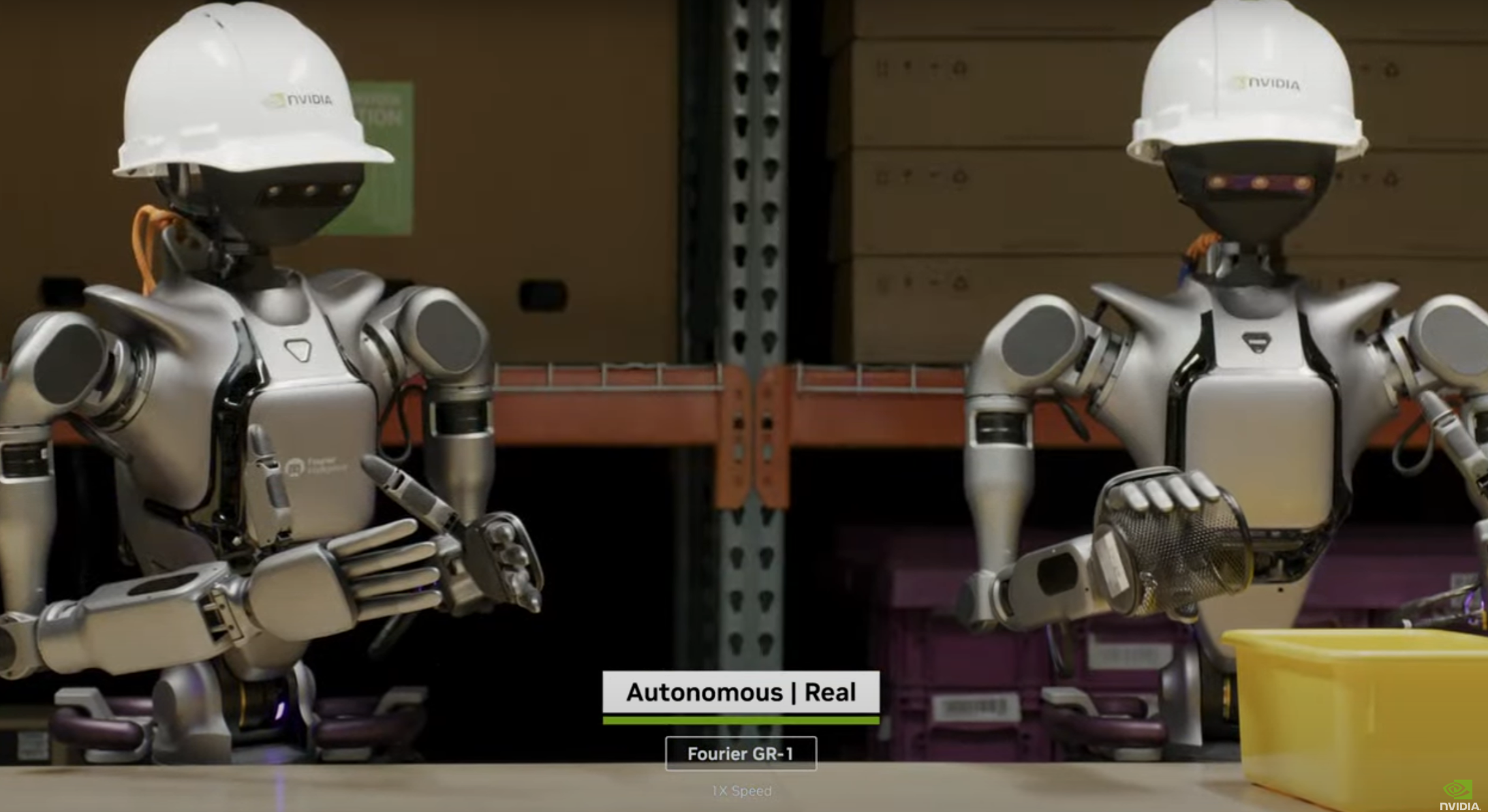

To help combat this, Huang unveils Nvidia Isaac GR00T N1, "the world’s first open Humanoid Robot foundation model", alongside several other important development tools.

There are also blueprints, including the NVIDIA Isaac GR00T Blueprint for synthetic data generation, which helps produce the large, detailed synthetic data sets needed for robot development that would be prohibitively expensive to gather in real life.

Not terrifying at all, right?

"Physical AI and robotics are moving at such a high pace - everyone should pay attention," Huang notes.

Huang moves on to some Omniverse demos - first off, with Cosmos, the operating system for physical AI digital twins.

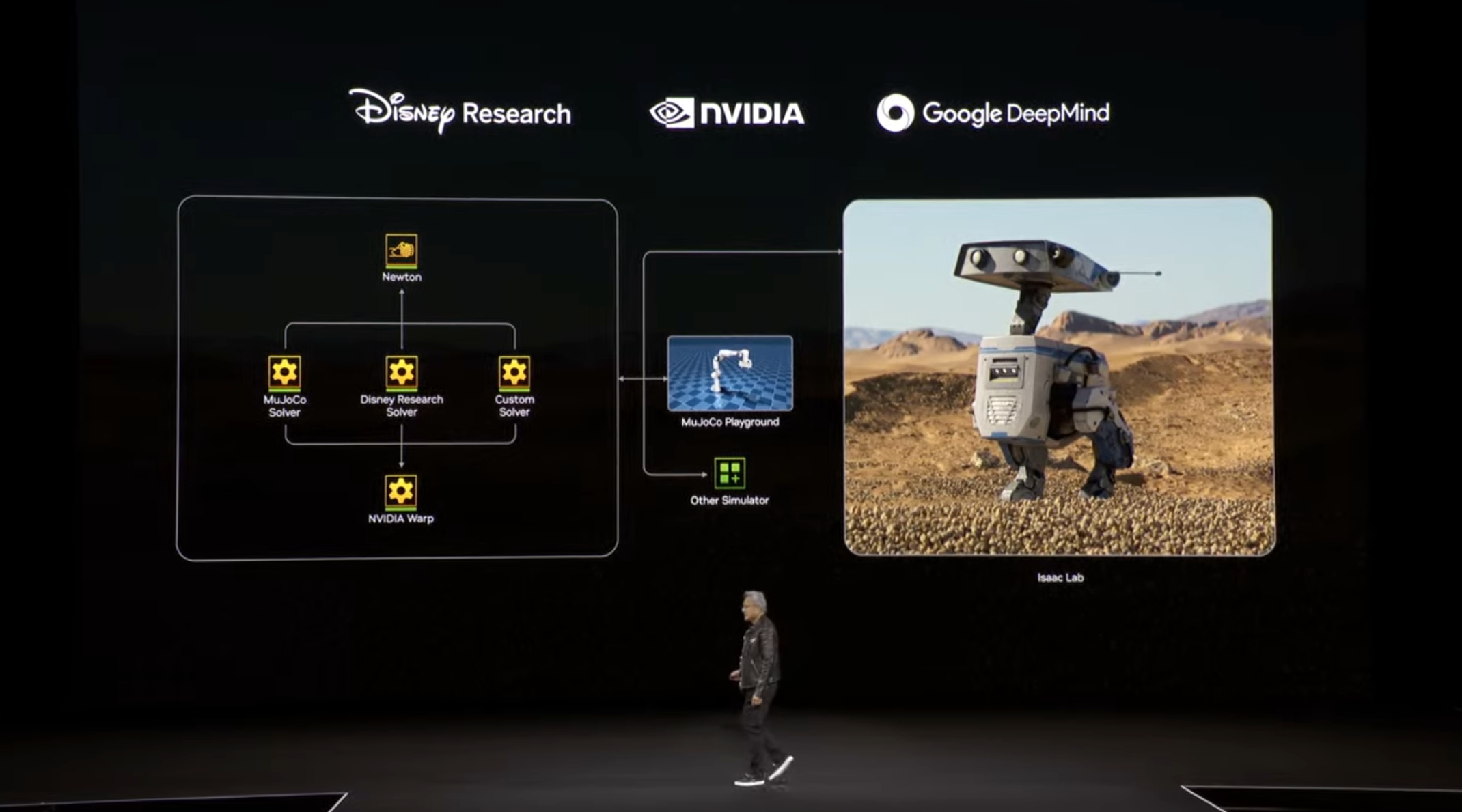

Nvidia is teaming up with DeepMind and Disney Research for a new platform.

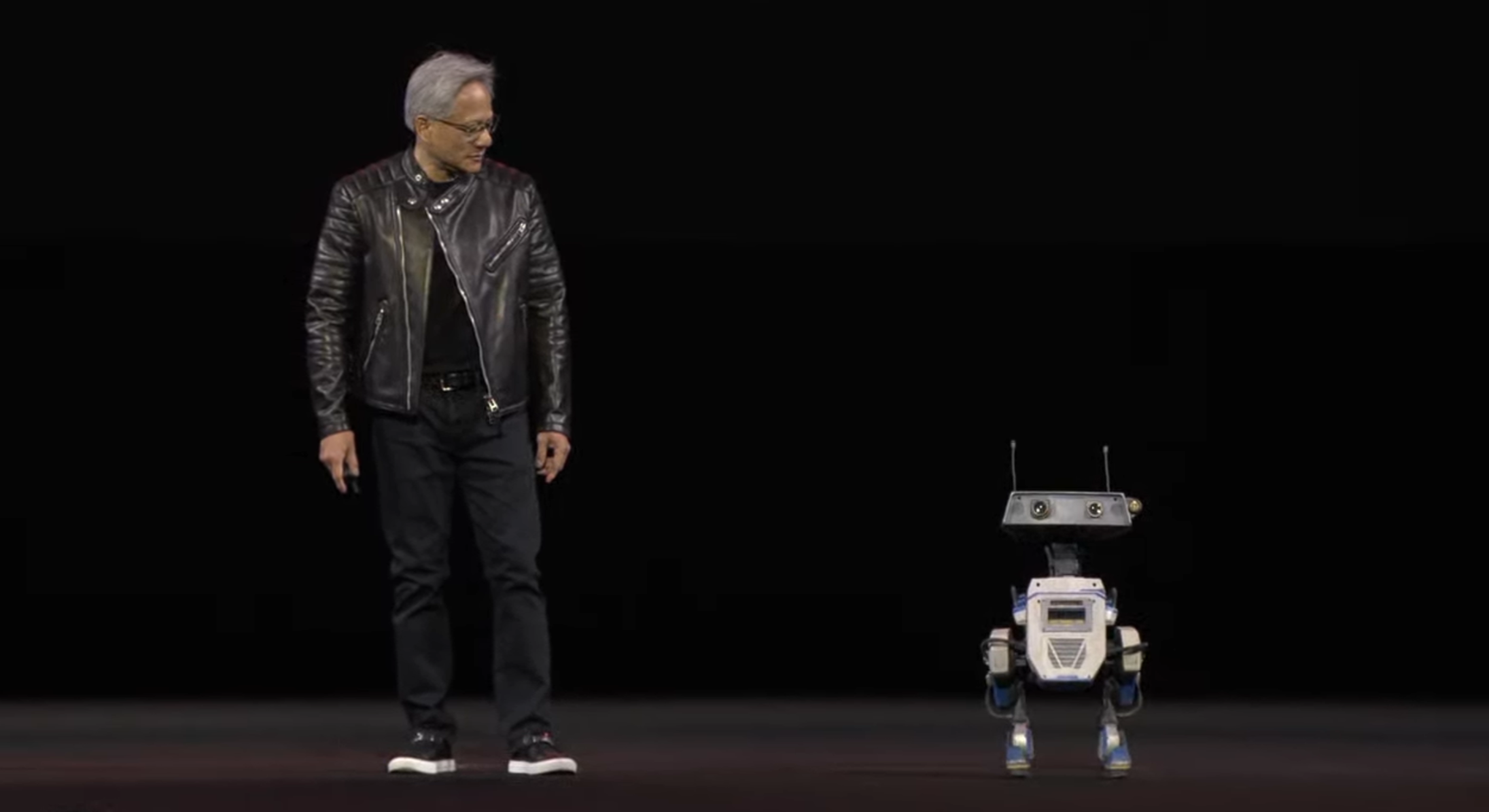

We're shown an incredibly detailed (and cute) demo video of a Star Wars-esque robot exploring a desert environment, before appearing onstage!

Jensen and his new robot offspring look very happy...

One final news announcement - GR00T N1 is open-source!

It's wrap-up time, and Huang recaps everything we've heard about over the last few hours...it's been a journey, hey?

And that's a wrap! It was an epic start to Nvidia GTC 2025, so we're off to digest all the news and announcements - stay tuned to TechRadar Pro for all the updates...

After yesterday's Nvidia GTC 2025 keynote, we thought there were a few things that weren't mentioned, or deserved highlighting - here's our top picks...

First up, if you missed the keynote, don't worry - you can view a full replay of Jensen Huang's time on stage here!

There was obviously a lot of content in Jensen Huang's two and a half hour keynote, even if the man himself admitted he had to race through a lot of it due to time constraints...

With that in mind, here's a recap of some of the biggest news you might have missed from yesterday's keynote.

NVAQC hopes to create quantum ‘AI supercomputers’ with new research center

Nvidia announced its Accelerated Quantum Research Center (NVAQC), housing a ‘supercomputer’ with 576 (!) Blackwell GPUs to drive breakthroughs in quantum hardware.

Tim Costa, Nvidia’s senior director of computer-aided engineering, quantum and CUDA-X, said in a press release that “the center will be a place for large-scale simulations of quantum algorithms and hardware, tight integration of quantum processors, and both training and deployment of AI models for quantum.”

William Oliver, director of MIT’s Center for Quantum Engineering, said it’s “a powerful tool that will be instrumental in ushering in the next generation of research across the entire quantum ecosystem.”

Nvidia-Certified Systems are getting enterprise storage certification, plus more on agentic AI

Next, a boon for enterprises and SMBs as Nvidia announced in a press release that its Certified Systems programme, which hosts over 50 partners and 500 system configurations, will, going forward, enforce “stringent performance and scalability requirements” geared towards AI workload implementation and factory construction.

It also announced an AI Data Platform, a "customizable reference design” for enterprise-class infrastructure geared towards hosting AI agents.

Oracle and Nvidia want to make agentic AI implementation easier

Next up, Jensen Huang and Oracle CEO Safra Catz made a joint announcement that Oracle Cloud Infrastructure and Nvidia AI Enterprise would integrate, making over 160 AI tools and NIM microservices available natively through OCI and reducing the effort needed for organizations to deploy models.

“Together, we help enterprises innovate with agentic AI to deliver amazing things for their customers and partners,” said Huang in a press release.

“NVIDIA’s offerings, paired with OCI’s flexibility, scalability, performance and security, will speed AI adoption and help customers get more value from their data,” added Catz.

Nvidia’s AI-Q Blueprint is bringing agent collaboration to enterprise

AI-Q, a new Nvidia Blueprint (Blueprints are predefined AI workflows aimed at developers), is enabling enterprises to develop agents that “can use reasoning to unlock knowledge in enterprise data”.

In a press release, Nvidia said that AI-Q, powered by its new open source AgentIQ software library, “integrates fast multimodal extraction and world-class retrieval” using Nvidia’s NeMo Retriever and NIM microservices, plus agentic systems.

Nvidia is using a ‘digital twin’ of Earth to improve weather forecasting

In case you missed it, Nvidia’s new Omniverse Blueprint for Earth-2 weather analytics model hopes to improve weather forecasting in the wake of “severe weather-related events” that have had a $2 trillion impact on the global economy in the last decade.

“The NVIDIA Omniverse Blueprint for Earth-2 will help industries around the world prepare for - and mitigate - climate change and weather-related disasters,” said Huang.

Nvidia building custom AI agents for telcos

Telcos are swamped with over 3,800 terabytes of data every minute, if we believe Nvidia. So it also took the time to announce that its partners are developing custom LLMs trained on telco data (which it calls large telco models, or LTMs) using Nvidia’s NIM and NeMo microservices, as part of new AI agents tailored for network operations management.

SoftBank and Tech Mahindra have already built new agents, while Amdocs, BubbleRAN and ServiceNow are reportedly working on their own agents using Nvidia AI Enterprise.

Nvidia enlisting PC manufacturers to produce a ‘supercomputer-class’ workstation

We also covered the DGX Station, Nvidia’s upcoming tower supercomputer with an Arm processor, the GB300 Grace Blackwell Ultra Desktop Superchip, inside, plus 784GB of unified system memory. In the announcement, the company confirmed that Asus, Dell, HP, Lambda, Boxx and Supermicro would partner to produce their own configurations.

In our coverage, speculation remained over the exact performance of the chip, as Nvidia hasn’t provided details of what kind of Arm CPU cores it uses or how many, nor of the GPU subsystem.

We do know that it can deliver 800Gb/s network speeds via its proprietary ConnectX-8 SuperNIC technology.

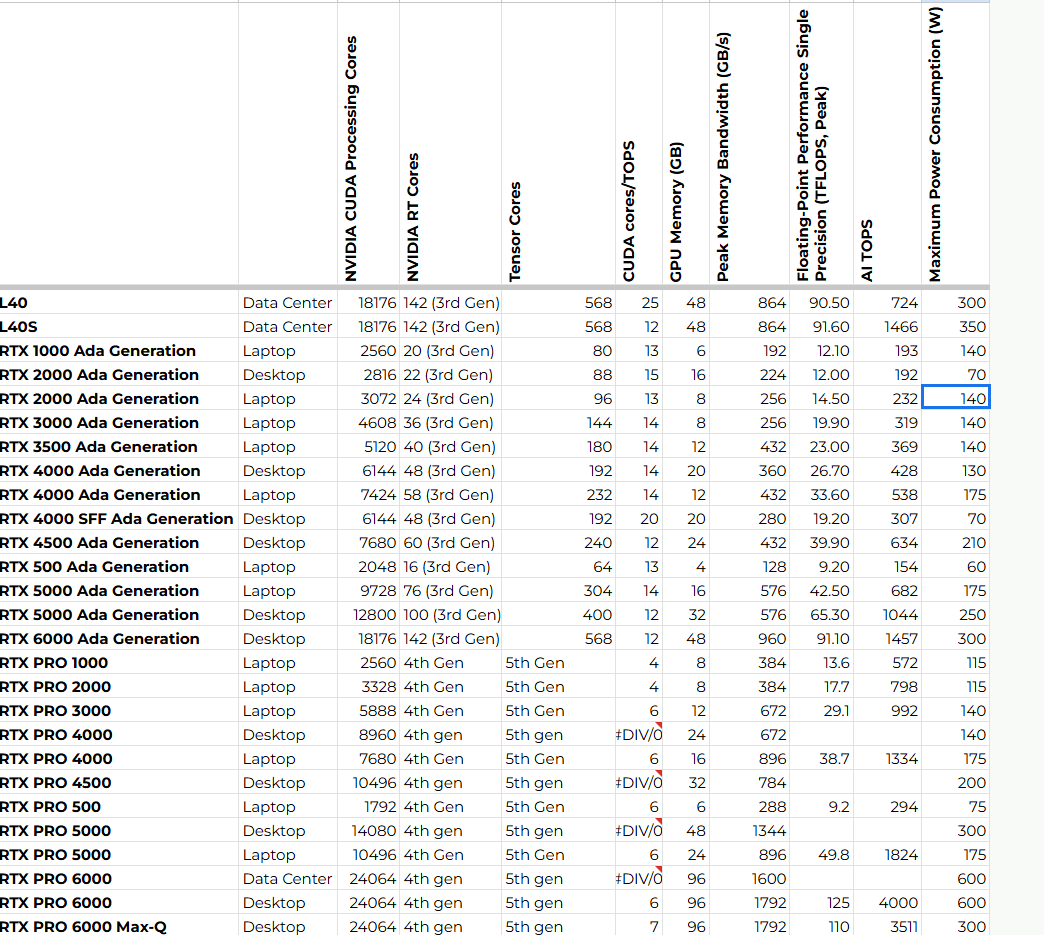

Nvidia launches a dozen professional GPUs

Nvidia also launched twelve entries in a new RTX Pro line of GPUs that go beyond what its consumer models are capable of. For instance, there are three RTX Pro 6000 variants that offer 96GB of ECC GDDR7 memory - three times as much as the 32GB RTX 5090, the latest and greatest consumer model.

Other GPUs in the new Pro line are aimed at professional workstations, data centers, servers and laptops.

Here are some of the best photos from yesterday's keynote - CEO Jensen Huang took center stage for much of it, but was later upstaged by a certain robot...

The entire list of all professional GPUs available from Nvidia

This is the list of all the professional GPUs available from Nvidia that target the data centre, laptop and desktop markets.

There are 27 at the time of writing, including the 12 launched yesterday with the Blackwell architecture.

Nvidia has already published their specification sheets, but important performance data (floating point and AI) as well as RT and Tensor core counts are still missing.

We’ve reached out to Nvidia to get these numbers.

GR00T N1 AI model for generalist robotics launched

Nvidia claims that “smart, humanoid robots” are closer than ever with the launch of GR00T N1, its latest advanced AI model.

“The age of generalist robotics is here,” said Huang. “With NVIDIA Isaac GR00T N1 and new data-generation and robot-learning frameworks, robotics developers everywhere will open the next frontier in the age of AI.”

The model has a dual-system architecture - “System 1” emulates fast human reflexes, while “System 2” is modelled after “deliberate, methodical decision-making.”
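To give a feel for what a dual-system split can look like in code, here is a minimal, purely illustrative Python sketch: a slow "System 2" planner refreshes a target only every few steps, while a fast "System 1" policy reacts on every step. This is not Nvidia's implementation; `run_dual_system`, `toy_planner`, `toy_policy` and the `plan_every` parameter are all hypothetical names made up for this example.

```python
from typing import Callable, List


def run_dual_system(observations: List[float],
                    slow_planner: Callable[[float], float],
                    fast_policy: Callable[[float, float], float],
                    plan_every: int = 4) -> List[float]:
    """Run a fast policy every step and a slow planner every `plan_every` steps."""
    actions: List[float] = []
    target = 0.0
    for step, obs in enumerate(observations):
        if step % plan_every == 0:
            # "System 2" stand-in: occasional, deliberate re-planning.
            target = slow_planner(obs)
        # "System 1" stand-in: quick reaction on every single step.
        actions.append(fast_policy(obs, target))
    return actions


def toy_planner(obs: float) -> float:
    """Hypothetical planner: aim one unit beyond the current observation."""
    return obs + 1.0


def toy_policy(obs: float, target: float) -> float:
    """Hypothetical reflex: move halfway toward the current target."""
    return 0.5 * (target - obs)


actions = run_dual_system([0.0, 0.2, 0.4, 0.6, 0.8], toy_planner, toy_policy)
print(actions)
```

In this toy run the planner is only consulted on steps 0 and 4, while the reflex policy produces an action at every step using whichever target was set most recently.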

First outing for Kioxia’s 122.88TB SSD

GTC is - fortunately - not just about graphics cards and dancing robots.

There’s the serious stuff as well, like Kioxia’s LC9, a 122.88TB SSD which has the same form factor as a small hard disk drive (2.5-inch).

While everyone fawns over compute, tokens, TOPS and FLOPS, few look at storage as being the next big thing.

Both affordable, high-capacity (but slow) storage and super-fast, expensive (but small) storage have their roles to play in generative AI, as they provide the foundation for the bits and bytes that feed training and inference processes.

That’s what is driving vendors like Kioxia or Sandisk to release bigger SSDs (or Seagate with bigger hard drives), none of which are destined for the consumer market.

PNY is first video card manufacturer to launch RTX Pro Blackwell cards

PNY announced this morning that it will sell professional graphics cards based on the new Nvidia Blackwell GPUs announced yesterday at GTC.

That includes the RTX Pro 6000 BWE (Blackwell Workstation Edition), the RTX Pro 6000 Max-Q BWE, the RTX Pro 5000 Blackwell and the RTX Pro 4500 Blackwell.

No sign of the RTX Pro 4000 and the RTX Pro 6000 Data Center edition - we've reached out to PNY to find out whether they would sell these last two SKUs and will report back with any updates.

So what is it with the RTX Pro 6000 Blackwell Max-Q Workstation Edition?

Eagle-eyed TechRadar Pro readers may have noticed that Nvidia has released a Max-Q version of the RTX Pro 6000 Blackwell Workstation Edition.

We did some further digging and found out that it has the same specifications, except for the maximum power consumption and the performance, which is about 10% lower.

Max-Q is a technology Nvidia used in laptop GPUs to reach maximum efficiency (i.e. the right balance of power consumption and performance).

Why did Nvidia launch such a SKU and what does it say about the future of the Blackwell family? We wonder.

That's a wrap on day two of Nvidia GTC 2025! Check out all of our updates below, and you can see a full list of coverage at the top of the page.

Day three of Nvidia GTC 2025 is set to kick off soon, with a focus on quantum computing, but while you get ready for that, how about another recap?

Other websites within the Future portfolio have been covering GTC 2025 as well, a sign of the growing importance of the event for anyone interested in technology - so here's what you might have missed:

From PC Gamer

Nvidia's GTC keynote inevitably went all in on AI but I'm definitely here for the Isaac GR00T robots

From Tom's Hardware

Nvidia unveils DGX Station workstation PCs with GB300 Blackwell Ultra inside

Nvidia’s new silicon photonics-based 400 Tb/s switch platforms enable clusters with millions of GPUs

Nvidia CEO stops by Denny's food truck to eat and serve Nvidia Breakfast Bytes before GTC 2025

The most viewed videos of GTC 2025

Jensen Huang’s keynote at GTC 2025 was, not surprisingly, the most viewed video of the event so far - the recap of the entire session has almost 700,000 views at the time of writing.

However, it’s only one of many videos of the event - here are the top five videos from GTC right now...

Nvidia Isaac GR00T N1 (96K views)

https://www.youtube.com/watch?v=m1CH-mgpdYg

Robots learning to be robots (24K views)

https://www.youtube.com/watch?v=S4tvirlG8sQ

Introducing DGX Spark, formerly known as Project Digits (23K views)

https://www.youtube.com/watch?v=6p4U1kSiegg

The building blocks of AI or how to have fun with tokens (12K views)

https://www.youtube.com/watch?v=TEGAQxg1gVA

Accelerating computational engineering (7.3K views)